Digital twins to embodied artificial intelligence: review and perspective

Abstract

Embodied artificial intelligence (AI) is reshaping the landscape of intelligent robotic systems, particularly by providing realistic solutions for executing actions in complex and dynamic environments. However, Embodied AI requires large amounts of data for training and evaluation to ensure safe interaction with physical environments. Therefore, it is necessary to build cost-effective simulated environments that capture physical characteristics, object properties, and interactions, and thereby provide sufficient data for training and optimization. Digital twins (DTs) are a key technology in Industry 5.0; they enable real-time monitoring, simulation, and optimization of physical processes by mirroring the states and actions of their real-world counterparts. This review explores how integrating DTs with Embodied AI can bridge the sim-to-real gap by transforming virtual environments into dynamic, data-rich platforms. DTs offer real-time monitoring and virtual simulation, enabling Embodied AI agents to train and adapt in virtual environments before deployment in real-world scenarios. The main challenges and a novel perspective on the future development of integrated DTs and Embodied AI are also discussed. To the best of our knowledge, this is the first work to comprehensively review the synergies between DTs and Embodied AI.


1. INTRODUCTION

Embodied artificial intelligence (AI) represents a general movement toward realistic systems that can adapt to dynamic and uncertain situations in the real world [1]. Unlike traditional disembodied AI, which often relies on abstract data processing in cyberspace, Embodied AI emphasizes the importance of physical interaction, perception, and motion in accomplishing multi-objective tasks, including sensing and multi-sensor fusion for Embodied robots, safe navigation and interaction under uncertainties and disruptions, and self-supervised learning and reasoning to understand human behaviors [2-4]. Embodied AI requires large amounts of data for training and evaluation in its surrounding environment. In addition, the potential damage to robots in hazardous scenarios and possible injuries to humans during interactions have largely confined current Embodied AI to simulation environments. Therefore, it is necessary to build cost-effective simulated environments for training and optimizing Embodied AI.

Many Embodied AI simulators have been developed to provide suitable platforms for testing Embodied AI. Duan et al. reviewed and compared current Embodied AI simulators [5]. Their comparison indicated that AI2-THOR [6], Gibson [7], and Habitat-Sim [8] are highly regarded and widely adopted in Embodied AI research because of their realism, scalability, and interactivity. Liu et al. provided an overview of various simulators used in Embodied AI research [9]. They noted that the growing number of simulators (e.g., RoboGen [10], HOLODECK [11], PhyScene [12], and ProcTHOR [13]) is valuable for Embodied AI research because they enhance the generation of interactive, diverse, and physically consistent simulation environments. However, most simulators have limitations. For example, AI2-THOR offers many interactive scenes, but its script-based interactions lack physical realism, making it suitable only for tasks that do not require highly accurate interactions. Matterport3D [14] is recognized for its foundational role in Embodied AI benchmarks despite its limited support for agent interaction.

A significant challenge in achieving Embodied AI is the sim-to-real gap, which refers to the difficulties faced when transferring a model, algorithm, or control strategy trained or developed in a simulated environment to a real-world system. Simulations provide a cost-effective and safe alternative to real-world experimentation, which is costly, risky, and complex; however, they often fail to capture the full complexity, variability, and unpredictability of real-world environments. Digital twins (DTs) are important technologies in Industry 5.0 [15] and smart manufacturing [16]. DTs enable real-time monitoring, simulation, and optimization of physical processes by mirroring the states and actions of their real-world counterparts. Recently, they have become critical tools for improving the efficiency and reliability of robotic systems [17-20]. DTs offer several significant benefits in robotic applications. For instance, DTs can create a virtual representation of physical robots and their environments, allowing real-time simulation and testing of different scenarios to improve the efficiency and safety of collaborative robotics. In addition, DTs provide a shared visual representation of the physical system, which can enhance understanding of the system and its behavior in human-robot collaborative tasks.

Recent developments in DT technology have the potential to fulfill the requirements of Embodied AI for training and optimization in virtual environments while also enhancing real-time response capabilities. Therefore, this review provides an extensive overview of both DTs and Embodied AI and offers new perspectives on how their integration may shape future research. The integration of DTs and Embodied AI presents a promising avenue for advancing intelligent systems, as DTs enable AI agents to test and refine behaviors in virtual environments before physical deployment. In addition, human and environmental DTs can broaden the scope of Embodied AI to enhance human–robot collaboration (HRC) and operational efficiency in large-scale environments. This review presents a comprehensive analysis of DTs, from their conceptual origins to current applications in robotics and beyond, and investigates the core components of state-of-the-art Embodied AI. To the best of our knowledge, this is the first comprehensive review of the synergies between DTs and Embodied AI, underscoring how DTs can deliver highly accurate, real-time virtual replicas that enable Embodied agents to learn, adapt, and operate effectively in complex environments.

This paper is structured as follows. Section 2 introduces the background of DTs, the literature review process, and preliminary results. Section 3 reviews current applications of DTs in robotic systems. Section 4 discusses the key technologies of Embodied AI. Section 5 explores the potential connections between DTs and Embodied AI, examining how they can complement and enhance each other. Section 6 concludes with a perspective on future research directions.

2. OVERVIEW

This section first introduces the background of DTs, then outlines the literature review process, and finally presents the preliminary results.

2.1. Background of DTs

The concept of DTs can be traced back to the 1960s, when the National Aeronautics and Space Administration (NASA) used virtual simulation technology to test and simulate spacecraft during the Apollo program [21]. These technologies allowed engineers to identify and resolve potential issues before launch, thereby increasing mission success rates. Although the term "Digital Twin" was not used at that time, the tight integration between virtual models and physical objects was already evident [22]. In 2002, Grieves [23] first introduced the concept of the "Digital Twin" in the context of product lifecycle management, in which a virtual model of a product spans its entire lifecycle, from design and manufacturing to maintenance. In the 2010s, the rapid development of Internet of Things (IoT) and Big Data technologies provided powerful support for DT applications [24-26]. IoT devices can collect real-time data from physical objects and transmit it to virtual models, enabling real-time updates and simulations. DTs have also become a key technology in Industry 4.0, widely used in smart manufacturing, predictive maintenance, and production optimization [27-29]. Based on real-time monitoring and data analysis, DT technology has significantly improved production efficiency and product quality. In 2012, NASA redefined DTs as highly accurate simulations that integrate multiphysics and multiscale modeling with probabilistic methods [30]. These DTs provide real-time reflections of their physical counterparts by using historical data, real-time sensor inputs, and detailed physical models to ensure high fidelity and timely updates.

Recently, DTs have become a popular research topic across industrial applications, supported by novel technologies such as AI, 5G, and blockchain. Huang et al. integrated large language models (LLMs) into DTs to simulate complex scenarios and forecast future conditions with high precision [31]. Rodrigo et al. developed a blockchain-based DT of a mobile network for 5G and 6G environments that accounts for cybersecurity and Industry 4.0 scenarios [32]. In their network, DTs offer a cost-effective way to assess performance and make informed decisions. Hasan et al. proposed a blockchain-based process for creating DTs that ensures secure and trusted data provenance [33]. Feng et al. proposed a DT-based intelligent gear health management system that assesses the progression of gear degradation with a novel transfer learning algorithm [34, 35]. Zhang et al. proposed a DT-based intelligent diagnosis method for assessing bearing conditions with a partial domain adaptation algorithm [36]. Feng et al. proposed a novel DT-enabled domain adversarial graph network for effective bearing fault diagnosis with limited knowledge [37]. Beyond industrial applications, DTs are beginning to demonstrate potential in other domains, including healthcare [38, 39], agriculture [40, 41], and transportation [42, 43]. Table 1 summarizes highly cited DT papers from the Web of Science (WOS) database over the past five years.

Table 1. Highly cited DT papers from the WOS database

| Ref. | Year | Application field | Core technologies | Data sources | Results |
|---|---|---|---|---|---|
| Arraño-Vargas and Konstantinou [44] | 2023 | Power systems | Modular framework, real-time model, EMT | Australian National Electricity Market | Developed flexible, robust, cost-effective PSDT framework |
| Lv et al. [45] | 2023 | Manufacturing | Active learning-DNN, domain adversarial neural networks | Experimental data | Designed DT-based workshop system for fault diagnosis, trend prediction |
| Mo et al. [46] | 2023 | Manufacturing | Modular AI algorithms, knowledge graph | Simulation data | Enabled dynamic reconfiguration of manufacturing systems |
| Kapteyn et al. [47] | 2022 | UAVs | Reduced-order models, Bayesian state estimation | Structural sensor data from UAV | Developed physics-based DT and library of damaged/pristine UAV components |
| Lv et al. [48] | 2022 | Healthcare | DL, improved AlexNet | Simulation data | Improved transmission delays, energy use, task completion, resource utilization |
| Li et al. [49] | 2022 | Smart cities | Big data, DL, CNN, multi-hop transmission | Wireless sensor network | Provided experimental references for smart city digital development |
| Lv et al. [50] | 2022 | Transportation | DL, CNN, support vector regression | Simulation data | Maintained high data transmission, optimal vehicle path planning |
| Wan et al. [51] | 2021 | Medical imaging | Semi-supervised SVM, improved AlexNet | MRI data from brain tumor department | Improved model performance compared to other methods |
| Darvishi et al. [52] | 2021 | Sensor technology | Machine learning, fault detection | Air quality, wireless sensor network data | Developed machine-learning-based architecture for sensor validation |
| Malik and Brem [53] | 2021 | HRC | Virtual counterpart, validation | Simulation data | Explored DT opportunities in collaborative production systems |
| Dembski et al. [54] | 2020 | Smart cities | 3D modeling, urban mobility simulation | Sensor network, mobile app data | Enhanced urban planning through participatory and collaborative processes |
| Wu et al. [55] | 2020 | Smart battery | Battery modeling, machine learning | Onboard sensing, diagnostics | Framework for smarter battery control and longer lifespan |

DT: Digital twin; WOS: Web of Science; EMT: electromagnetic transient; PSDT: power system digital twin; DNN: deep neural network; AI: artificial intelligence; UAVs: unmanned aerial vehicles; DL: deep learning; CNN: convolutional neural network; SVM: support vector machine; MRI: magnetic resonance imaging; HRC: human-robot collaboration.

As shown in Table 1, DTs are applied in various domains, including power systems, manufacturing, healthcare, smart cities, transportation, medical imaging, sensor technology, HRC, and smart batteries. The key results highlight significant improvements in system efficiency, predictive capability, and operational optimization, underscoring the critical role of DTs in enhancing performance and decision-making across multiple industries. However, many studies still rely primarily on simulated data rather than real-time data collected from actual environments.

2.2. Literature review process

To facilitate efficient searching and collection of research papers, WOS (https://webofscience.com) and Engineering Village (EI) (http://www.engineeringvillage.com/) were selected for their comprehensive coverage of high-quality peer-reviewed publications in the engineering field. In addition, Google Scholar was used to collect additional highly cited papers, especially preprints from arXiv (https://arxiv.org/).

Journal publications were collected based on their relevance to DTs and Embodied AI and on their Journal Citation Reports (JCR) categories in WOS. These categories cover diverse research areas, including information science, automation, bioinformatics, imaging science, industrial manufacturing, electrical and electronic engineering, and robotics. In addition, papers from top-tier AI conferences [e.g., the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) and the IEEE/CVF International Conference on Computer Vision (ICCV)] and robotics conferences [e.g., the IEEE International Conference on Robotics and Automation (ICRA) and the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)] are included.

The literature search covers two aspects: DTs and Embodied AI. The WOS search query for DTs and HRC is (TS = ((digital twin* OR "digital twins" OR "digital-twin" OR "robotic digital twin*" OR "human digital twin*" OR "environmental digital twin*" OR "industrial digital twin*" OR "manufacturing digital twin*" OR "agricultural digital twin*" OR "urban digital twin*") AND (human-robot OR "human robot" OR "human machine" OR interaction OR interactive OR collaboration OR collaborative) AND (industrial OR industry OR manufacturing OR production))) AND (PY = 2020 OR 2021 OR 2022 OR 2023 OR 2024), and the query for Embodied AI is (TS = (("embodied AI" OR "embodied artificial intelligence") AND (sensing OR perception OR "motion planning" OR "motion control" OR learning OR reasoning OR interaction OR collaboration OR train OR "real-world environment"))) AND (PY = 2020 OR 2021 OR 2022 OR 2023 OR 2024).
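Both queries follow the same pattern: synonyms are joined by OR within a group, the groups are joined by AND inside the TS (topic) field, and a publication-year clause is appended. The short Python sketch below assembles a query string in that pattern. The helper names `or_group` and `build_wos_query` are hypothetical, and the code only builds text; it does not call any Web of Science API. Repeated terms within a group are dropped because they do not change the result of an OR.

```python
from typing import Iterable, List


def or_group(terms: Iterable[str]) -> str:
    """Join synonyms with OR inside parentheses, keeping first-seen order."""
    unique: List[str] = list(dict.fromkeys(terms))  # drops repeated terms
    return "(" + " OR ".join(unique) + ")"


def build_wos_query(concepts: Iterable[Iterable[str]], years: Iterable[int]) -> str:
    """AND together the OR-groups inside a TS field and restrict publication years."""
    topic = " AND ".join(or_group(group) for group in concepts)
    year_clause = "PY = " + " OR ".join(str(year) for year in years)
    return f"(TS = ({topic})) AND ({year_clause})"


# Rebuilds the Embodied AI query quoted above (terms copied from the text;
# multi-word phrases carry their own double quotes).
EMBODIED_AI_QUERY = build_wos_query(
    [
        ['"embodied AI"', '"embodied artificial intelligence"'],
        ["sensing", "perception", '"motion planning"', '"motion control"',
         "learning", "reasoning", "interaction", "collaboration", "train",
         '"real-world environment"'],
    ],
    range(2020, 2025),
)

if __name__ == "__main__":
    print(EMBODIED_AI_QUERY)
```

Keeping the term lists as data makes it easier to audit the search and to rerun it with an extended year range.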

After papers unrelated to the survey topic were filtered out based on title, keywords, and abstract, 107 relevant papers were selected. A further 31 relevant papers were identified from references and supporting materials, giving a total of 138 papers that form the basis of this review.

2.3. Preliminary results

During the literature review, several preliminary observations emerged. A major challenge in Embodied AI research is the sim-to-real gap. Many algorithms developed in simulation rely on simplified or predefined models and often fail to perform adequately in real-world settings. This challenge becomes more pronounced for tasks that require large datasets, such as large-scale cooperative control of robots. In addition, current DT research often emphasizes the creation of virtual environments, but most studies do not use these virtual spaces for learning, prediction, or inference. As a result, practical strategies or recommendations derived from such DTs have limited impact on real-world scenarios.

This review suggests that an integrated framework combining DTs and Embodied AI could address the limitations observed in both fields. By offering high-fidelity models of real-world environments, DTs can narrow the sim-to-real gap common in Embodied AI. Meanwhile, AI-driven learning and inference mechanisms within DTs can transform virtual environments from passive simulations into data-rich platforms for ongoing training and validation. This synergy enables continuous refinement of both the physical and virtual components, ultimately fostering adaptive strategies that are more robust and transfer more seamlessly to real-world scenarios.

However, despite the promising potential of combining DTs and Embodied AI, this review also identifies several challenges in their integration, which are summarized as follows:

● Data latency and transfer: Large data volumes must travel between physical systems and digital models, causing delays that hinder real-time feedback. Such latency is especially problematic in contexts that require quick responses, such as healthcare or industrial robotics.

● Synchronization issues: Delays or mismatches in updates can result in decisions based on outdated or incorrect data, particularly in fast-changing settings, where continuous synchronization is essential.

● Data privacy: Human-centric applications often rely on personal data such as health metrics or behavioral patterns, introducing privacy risks that require robust security measures without compromising system functionality.

● Scalability of DTs: As systems expand, managing interoperability and resilience becomes increasingly complex. This issue is significant in domains such as smart cities and large industrial networks, which require efficient, adaptable, and secure DT operations.
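The latency and synchronization issues above can be made concrete with a small guard on the twin side. The sketch below is an illustrative assumption rather than a method from the reviewed works: a hypothetical `TwinState` record accepts a sensor sample only if it is newer than the state it already mirrors, so late packets cannot overwrite fresher data, and it flags the state as stale when its age exceeds an application-specific tolerance `max_staleness_s`.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class TwinState:
    """Hypothetical DT record mirroring one physical asset."""
    max_staleness_s: float               # assumed, application-specific tolerance
    timestamp: Optional[float] = None    # measurement time of the mirrored sample
    values: Dict[str, float] = field(default_factory=dict)

    def update(self, timestamp: float, values: Dict[str, float]) -> bool:
        """Apply a sample only if it is newer than the mirrored state."""
        if self.timestamp is not None and timestamp <= self.timestamp:
            return False  # late or duplicate packet: keep the fresher state
        self.timestamp = timestamp
        self.values = dict(values)
        return True

    def is_stale(self, now: float) -> bool:
        """True if acting at time `now` would rely on outdated data."""
        return self.timestamp is None or now - self.timestamp > self.max_staleness_s


if __name__ == "__main__":
    twin = TwinState(max_staleness_s=0.05)
    print(twin.update(10.00, {"joint_1": 0.52}))  # newer sample: accepted
    print(twin.update(9.98, {"joint_1": 0.50}))   # arrived late: rejected
    print(twin.is_stale(now=10.02), twin.is_stale(now=10.10))
```

Comparing measurement timestamps rather than arrival times assumes that the physical and virtual sides share a synchronized clock; without one, the staleness check itself becomes unreliable.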

3. DTs IN ROBOTIC SYSTEMS

This section explores the integration of DTs in robotic systems, covering their historical development, their role in robotic digital twins (RDTs), and applications in industry and HRC. It highlights the key aspects of DTs that are of primary concern: the physical components involved, the simulation platforms used, the methods of data collection, and the services provided by these systems. These factors are crucial in determining the performance and applicability of DTs, making their thorough understanding essential for the advancement of research and practical implementation in this field.

3.1. RDTs

Robots play an increasingly important role in industrial production and manufacturing processes [56]. Integrating robotic systems into industrial processes enables human operators to focus on more strategic and creative functions to deal with complex tasks [57]. RDTs represent a comprehensive convergence of robotics, digital modeling, and real-time data analytics. Figure 1 shows a general example of RDTs. The virtual counterparts of physical robots enable enhanced interaction, monitoring, and control by providing a high-fidelity simulation of the robot's dynamics, kinematics, and operational environment. Based on real-time multi-modal sensor data, RDTs continuously update to mirror the state of their physical counterparts, which allows for predictive maintenance, task optimization, and adaptation to changing conditions. By enabling simulation-driven design, testing, and deployment, RDTs can significantly accelerate training processes in robotics and offer a robust platform to validate AI-driven control strategies and ensure operational reliability in real-world applications.
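To make the kinematic side of an RDT concrete, the following minimal sketch mirrors the joint angles of a hypothetical planar two-link arm and computes the end-effector position with standard forward kinematics. The class name, link lengths, and two-joint geometry are assumptions for illustration only; practical RDTs use full 3D kinematic and dynamic models of the robot.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PlanarTwoLinkTwin:
    """Hypothetical kinematic twin of a planar two-link arm (lengths in metres)."""
    l1: float = 0.4
    l2: float = 0.3
    theta1: float = 0.0  # mirrored joint angles in radians
    theta2: float = 0.0

    def sync(self, theta1: float, theta2: float) -> None:
        """Copy the latest joint readings reported by the physical robot."""
        self.theta1, self.theta2 = theta1, theta2

    def end_effector(self, theta1: Optional[float] = None,
                     theta2: Optional[float] = None) -> Tuple[float, float]:
        """Forward kinematics; pass candidate angles to evaluate a 'what-if'
        pose without changing the mirrored state."""
        t1 = self.theta1 if theta1 is None else theta1
        t2 = self.theta2 if theta2 is None else theta2
        x = self.l1 * math.cos(t1) + self.l2 * math.cos(t1 + t2)
        y = self.l1 * math.sin(t1) + self.l2 * math.sin(t1 + t2)
        return x, y


if __name__ == "__main__":
    twin = PlanarTwoLinkTwin()
    twin.sync(0.0, math.pi / 2)                  # mirror the physical joints
    print(twin.end_effector())                   # approximately (0.4, 0.3)
    print(twin.end_effector(math.pi / 2, 0.0))   # approximately (0.0, 0.7)
```

Separating `sync` (mirroring) from the optional candidate angles in `end_effector` (simulation) reflects the two roles of an RDT described above: tracking the physical robot and testing poses virtually before execution.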

Figure 1. Example of RDT. (A) Multi-modal sensors combine data from multiple sensor types, such as visual, auditory, and tactile inputs, to provide comprehensive and robust environmental perception and enhance the accuracy and reliability of intelligent systems; (B) DTs data include real-time data representing the physical and functional characteristics of a physical robot; (C) DTs create a virtual representation of a physical robot and enable real-time simulation of its physical counterpart; (D) DTs simulate the physical characteristics, environment, and operating conditions of robots to enable robots to train and learn in a virtual environment; (E) DTs provide real-time data and insights, which are crucial for making informed decisions in the complex environment; (F) DTs help optimize the execution of complex tasks by identifying the most effective sequences and methods. RDT: Robotic digital twin; DTs: digital twins.
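As a simple illustration of how redundant multi-modal measurements can improve accuracy (panel A), the sketch below applies inverse-variance weighting to independent estimates of the same quantity, such as an object distance reported by a camera and by a LiDAR. This textbook fusion rule was chosen for illustration and assumes unbiased measurements with known, independent noise variances. It is not the fusion method of any specific system reviewed here, and the function name and numbers are hypothetical.

```python
from typing import Iterable, Tuple


def inverse_variance_fusion(
        measurements: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent, unbiased (value, variance) estimates of one quantity.

    Each estimate is weighted by 1 / variance; the fused variance is
    1 / (sum of weights), which is never larger than the smallest input variance.
    """
    pairs = list(measurements)
    if not pairs:
        raise ValueError("need at least one measurement")
    if any(var <= 0 for _, var in pairs):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in pairs]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, pairs)) / total
    return fused_value, 1.0 / total


if __name__ == "__main__":
    camera = (2.00, 0.04)  # hypothetical distance estimate in m, variance in m^2
    lidar = (2.10, 0.01)
    print(inverse_variance_fusion([camera, lidar]))
```

With these assumed inputs, the fused distance is 2.08 m with a variance of 0.008 m², which is lower than either input variance. This is the sense in which combining sensor types can make perception both more accurate and more robust.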

An important characteristic of RDTs lies in their capacity to accurately simulate the dynamics of robotic systems. Achieving this requires high-precision modeling of the robotic system, often using computer-aided design (CAD) software [58] or game engines such as Unreal Engine [59]. Table 2 summarizes current RDT research, focusing on physical and virtual components, data collection methods, and services. Sun et al. developed a learning-based framework for complex assembly tasks based on a multi-modal DT environment that integrates visual, tactile force, and proprioceptive data [60]. By collecting VR-based demonstration data and constructing a skill knowledge base through multi-modal analysis, they enable the transfer of learned assembly skills from the digital realm to real-world settings. Xu et al. proposed a cognitive DT framework for multi-robot collaborative manufacturing, in which an incomplete multi-modal Transformer with a deep autoencoder enhances system cognition by efficiently handling incomplete data [61]. This multi-modal approach improves robustness and adaptability in DT environments. Liu et al. proposed a high-fidelity modeling approach and architecture for RDTs to enhance real-time monitoring and intelligent applications [62]. By closely aligning virtual and physical systems, this framework offers advantages in transparent management, comprehensive simulation, and data visualization. However, potential drawbacks include high computational demands, scalability challenges, and difficulties in adapting the model to diverse industrial environments. Li et al. proposed a DT-based synchronization architecture for inspection robots [63]. The robotic system uses simultaneous localization and mapping (SLAM) to construct real-world maps, while wireless communication ensures smooth data transmission and robot synchronization, enabling real-time motion and algorithm training in the virtual environment. Liang et al. developed a real-time DT system that achieves high accuracy in trajectory planning and execution through real-time synchronization between the simulated and physical robots [64]. Although the system successfully bridges virtual and physical environments in real time, system complexity and potential data transmission latency could restrict its scalability and performance in more dynamic, large-scale construction settings. Zhang et al. presented a high-fidelity simulation platform for robot dynamics in a DT environment [65]. This approach provides accurate control logic mirroring real systems, simplifies algorithm development, and supports simultaneous testing in real and simulated environments. However, detailed dynamic models may increase computational cost and complexity, particularly when scaling to larger or more varied industrial settings. Liu et al. developed a DT-enabled approach for transferring deep reinforcement learning (DRL) algorithms from simulation to physical robots [66]. This approach uses DTs to correct real-world grasping points, improving the accuracy of robotic grasping and validating the effectiveness of the sim-to-real transfer mechanism. Mo et al. proposed Terra, a smart DT framework that employs a multi-modal perception module to capture and continuously update real-time environment and robot states [67]. This multi-modal approach enriches the DT representation, enabling on-the-fly policy updates and real-time feedback loops between virtual and physical spaces. However, while Terra demonstrates efficacy in an obstacle-rich environment with a simple robot, its scalability may be limited in more complex or resource-constrained scenarios. Cascone et al. created VPepper, a virtual replica of the Pepper humanoid robot, to improve interaction with smart objects in a smart home environment, allowing intensive machine learning training without risking wear on the physical robot [68]. In addition, Xu et al. introduced a DT-based industrial cloud robotics framework that integrates high-fidelity digital models with sensory data and encapsulates robot control capabilities as a service (robot control as-a-service), ensuring accurate synchronization and interaction between digital and physical robots [69].
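Synchronization quality between a virtual and a physical robot is often summarized by an error metric; for example, Table 2 lists minimal mean square errors between the virtual and physical robots as the service of Liang et al. [64]. The sketch below computes a generic mean squared joint-angle error over time-aligned samples. The function name, the assumption that samples are already aligned in time, and the example values are hypothetical, and the sketch does not reproduce the authors' exact procedure.

```python
from typing import Sequence


def synchronization_mse(virtual: Sequence[Sequence[float]],
                        physical: Sequence[Sequence[float]]) -> float:
    """Mean squared joint-angle error between time-aligned virtual and
    physical samples (one joint-angle vector per sample, in radians)."""
    if len(virtual) != len(physical) or not virtual:
        raise ValueError("need the same non-zero number of samples")
    squared_errors = []
    for v_sample, p_sample in zip(virtual, physical):
        if len(v_sample) != len(p_sample):
            raise ValueError("samples must have the same number of joints")
        squared_errors.extend((v - p) ** 2 for v, p in zip(v_sample, p_sample))
    return sum(squared_errors) / len(squared_errors)


if __name__ == "__main__":
    virtual = [[0.0, 0.5], [0.1, 0.6]]    # hypothetical twin joint angles
    physical = [[0.0, 0.5], [0.1, 0.62]]  # hypothetical encoder readings
    print(synchronization_mse(virtual, physical))  # about 1e-4
```

In practice, the two streams must first be resampled onto common timestamps; otherwise, transmission latency shows up as an inflated error even when the twin is tracking correctly.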

Table 2. Summary of recent RDTs

| Ref. | Year | Physical part | Virtual platform | Data collection | Service |
|---|---|---|---|---|---|
| Sun et al. [60] | 2024 | Robotic arm | Unity 3D | Visual, tactile force, and proprioception data | Transfer knowledge to the robot based on the multi-modal DT environment |
| Xu et al. [61] | 2024 | UR5 | ROS and URScript | 8-modality data from the system | Improve the robustness based on the incomplete multi-modal dataset |
| Liu et al. [62] | 2023 | SD3/500 robotic arm | Unity 3D, Pixyz plugin and MATLAB | Robot operational data | High-fidelity modeling approach and architecture for DTs of industrial robots |
| Li et al. [63] | 2022 | Car-like robot | Unity 3D and ROS | LiDAR data and 3D point cloud | Enable simultaneous mapping, data interaction, and motion control between the physical and virtual robots |
| Liang et al. [64] | 2022 | KUKA KR120 robotic arm | Gazebo | Transmission times, joint angles, and end-effector poses | Ensure minimal mean square errors between the virtual and physical robots |
| Zhang et al. [65] | 2022 | Kinova Gen3 robot and the MPS station | Festo CIROS and MATLAB | Optic, magazine and terminal sensors | High-fidelity representations of robot dynamics |
| Liu et al. [66] | 2022 | Dobot Magician (robotic arm) | V-REP PRO EDU 3.6.2 | RGB images, depth images | Real-time perception, grasp correction, sim-to-real transfer |
| Mo et al. [67] | 2021 | Custom-built robot | Unity 3D, PhysX physics engine | Multiview cameras | Real-time monitoring, intelligent perception, feedback loops for policy updates |
| Cascone et al. [68] | 2021 | Pepper (humanoid robot) | Unity 3D | Cameras, infrared, laser, sonar sensors | Real-time monitoring, object recognition, safe object handling, emergency intervention |
| Xu et al. [69] | 2021 | KUKA KR3 R540 and KR6 R700 | JavaScript and C-based simulation platform | Speed, acceleration, position | Real-time monitoring, remote control, robotic disassembly operations |
| Kaigom and Roßmann [70] | 2021 | KUKA LWR, IIWA, and KUKA KR 16 | Tecnomatix, ROS, Unity 3D and CIROS | Joint torques, velocities, and other sensor data | Motion optimization, decision-making |

RDTs: Robotic digital twins; DT: digital twin; ROS: Robot Operating System; RGB: red-green-blue.

As shown in Table 2, Unity 3D is the most frequently used virtual platform, appearing in six of the eleven studies, owing to its versatility in supporting real-time monitoring, object recognition, and policy updates. Other platforms, such as V-REP, are used for precise tasks such as grasp correction and sim-to-real transfer, while the PhysX physics engine is paired with Unity 3D for real-time perception and policy updates. Physical components such as KUKA industrial robots are often paired with platforms such as ROS and Tecnomatix, enabling motion optimization and decision-making in industrial settings. Meanwhile, Pepper humanoid robots and custom-built robots are used with Unity 3D for intelligent perception and emergency intervention in HRC scenarios. This analysis highlights the adaptability of Unity 3D, which makes it a preferred choice across various applications.

3.2. RDTs in industrial applications

In industrial applications, RDTs play a crucial role in optimizing manufacturing processes, improving efficiency, and reducing costs. Robot modeling involves representing and managing the robot system in a 3D environment [71]. However, virtual visualization alone is not sufficient to constitute an RDT. Crucially, RDTs should be able to learn and optimize in response to varying tasks and environmental uncertainties, thereby allowing dynamic adjustments to the physical robotic system. Zhang et al. proposed a novel DT modeling method for large-scale components in robotic systems, using a knowledge graph for knowledge representation and function blocks for multi-source data integration [72]. Zhang et al. developed a generic architecture for constructing comprehensive industrial robot DTs based on an ontology information model to address scalability and reusability challenges [73]. An ontology information model is a structured framework that represents knowledge about a particular domain using a formal ontology. In manufacturing, Chen et al. introduced a multi-sensor fusion-based DT for quality prediction in robotic laser-directed energy deposition additive manufacturing [74]. This method synchronizes multi-sensor features with real-time robot motion data to predict defects such as cracks and keyhole pores. Furthermore, Li et al. developed a DT model to automate the printed circuit board (PCB) kit box build assembly process, incorporating a symmetry-driven method to optimize pose matching and a quality prediction model based on small displacement torsor (SDT) and Monte Carlo methods [75]. Liu et al. used DTs and a deep Q-network (DQN) to dynamically optimize robotic disassembly sequence planning under uncertain missing conditions [76]. Wenna et al. proposed a DT framework for 3D path planning of large-span curved-arm gantry robots, integrating bilateral control, 3D mesh construction, and NavMesh-based multi-objective path planning to enhance safety and real-time performance [77]. Hu et al. proposed a grasp generation and selection convolutional neural network, trained and deployed in a DT of an intelligent robotic grasping system, to identify optimal grasping positions [78]. Tipary and Erdős facilitated the iterative refinement of work cells in both digital and physical spaces, accelerating commissioning and reconfiguration while minimizing physical work, as demonstrated through a pick-and-place task and a reconfiguration scenario [79].
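Liu et al. [76] use a deep Q-network for disassembly sequence planning. As a much smaller illustration of the underlying Q-learning idea, the sketch below substitutes tabular Q-learning on a hypothetical four-part product with precedence constraints and an assumed tool-change penalty. The part names, costs, and hyperparameters are invented for illustration, and the sketch models neither the DT nor the uncertain missing conditions considered in the original work.

```python
import random
from typing import Dict, FrozenSet, List, Optional, Tuple

# Hypothetical product: part -> (tool required, parts that must be removed first).
PARTS: Dict[str, Tuple[str, FrozenSet[str]]] = {
    "cover": ("screwdriver", frozenset()),
    "board": ("gripper", frozenset({"cover"})),
    "fan": ("screwdriver", frozenset({"cover"})),
    "battery": ("gripper", frozenset({"board"})),
}
STEP_COST = 1.0         # assumed cost of each removal
TOOL_CHANGE_COST = 2.0  # assumed extra cost when the tool changes

State = Tuple[FrozenSet[str], Optional[str]]  # (parts removed, tool in hand)


def feasible(removed: FrozenSet[str]) -> List[str]:
    """Parts not yet removed whose predecessors have all been removed."""
    return [p for p, (_, pre) in PARTS.items() if p not in removed and pre <= removed]


def step(state: State, part: str) -> Tuple[State, float]:
    """Remove one part; the reward is the negative removal cost."""
    removed, tool = state
    new_tool = PARTS[part][0]
    cost = STEP_COST + (TOOL_CHANGE_COST if tool not in (None, new_tool) else 0.0)
    return (removed | {part}, new_tool), -cost


def train(episodes=2000, alpha=0.5, gamma=1.0, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration over feasible actions."""
    rng = random.Random(seed)
    # Unseen pairs default to 0.0, which is optimistic because all rewards are negative.
    q: Dict[Tuple[State, str], float] = {}
    for _ in range(episodes):
        state: State = (frozenset(), None)
        while len(state[0]) < len(PARTS):
            actions = feasible(state[0])
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q.get((state, a), 0.0))
            next_state, reward = step(state, action)
            # Bootstrapped target; the terminal state (all parts removed) has value 0.
            best_next = max((q.get((next_state, a), 0.0)
                             for a in feasible(next_state[0])), default=0.0)
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
            state = next_state
    return q


def greedy_sequence(q) -> Tuple[List[str], float]:
    """Follow the learned greedy policy; return the sequence and its total cost."""
    state: State = (frozenset(), None)
    sequence: List[str] = []
    total_cost = 0.0
    while len(state[0]) < len(PARTS):
        action = max(feasible(state[0]), key=lambda a: q.get((state, a), 0.0))
        state, reward = step(state, action)
        sequence.append(action)
        total_cost -= reward
    return sequence, total_cost


if __name__ == "__main__":
    print(greedy_sequence(train()))
```

With these assumed costs, there are three feasible sequences with total costs of 6, 8, and 10. The learned greedy policy should recover cover, fan, board, battery (cost 6), which groups the two screwdriver operations before switching to the gripper. A deep Q-network replaces the lookup table `q` with a neural network when the state space is too large to enumerate.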

As shown in Table 3, Unity 3D is again widely used (in three of the eight studies), providing capabilities such as 3D path planning, motion analysis, and dynamic sequence planning, which are critical for industrial tasks. MATLAB and MWorks are employed for predictive maintenance and lifecycle management, ROS supports real-time monitoring and adaptive control, and Rviz, Gazebo, and OpenCASCADE support assembly simulation and accuracy analysis. Physical components such as KUKA and UR5 robotic arms are commonly used, supporting tasks such as grasp planning, path planning, and collision detection. Data collection methods, including RGB-D sensors, infrared cameras, and 3D position data, enhance the accuracy and efficiency of these applications. In addition, Zhang et al. [72] combine the Neo4j graph database with a Leica AT960 LT laser tracker for large-scale measurement, supporting data exchange, assembly simulation, and accuracy analysis.

Table 3. Summary of recent RDTs in industrial applications

| Ref. | Year | Physical part | Virtual platform | Data collection | Service |
|---|---|---|---|---|---|
| Zhang et al. [72] | 2024 | Stäubli TX200 | Neo4j | Leica AT960 LT for large-scale measurements | Data exchange, assembly simulation, accuracy analysis |
| Zhang et al. [73] | 2023 | KUKA KR210 R2700 | MATLAB, MWorks, Unity 3D | Torque, velocity, positional data | Real-time monitoring, control, predictive maintenance, lifecycle management |
| Chen et al. [74] | 2023 | KUKA 6-axis robot | ROS | Infrared camera, CCD, microphone, laser sensor | Real-time monitoring, defect prediction, adaptive control |
| Li et al. [75] | 2023 | 6-DOF manipulator | Rviz, Gazebo, OpenCASCADE | RealSense RGB-D, 3D sensors | Data exchange, assembly simulation, accuracy analysis |
| Liu et al. [76] | 2023 | KUKA LBR iiwa 14 R820 | Unity 3D | 3D point clouds, multi-perspective depth images | Real-time monitoring, dynamic sequence planning, disassembly |
| Wenna et al. [77] | 2022 | Gantry robot | Unity 3D | 3D position data | 3D path planning, motion analysis, bilateral control |
| Hu et al. [78] | 2022 | UR5e 6-DOF robotic arm | Bullet physics engine | RGB-D images | Grasp planning, quality evaluation, execution |
| Tipary and Erdős [79] | 2021 | UR5 robotic arm, Robotiq 2F-85 gripper | LinkageDesigner, PQP, V-Collide | 2D camera | Path planning, collision detection, task-specific uncertainty resolution |

RDTs: Robotic digital twins; ROS: Robot Operating System; CCD: charge-coupled device; RGB-D: red-green-blue-depth; DOF: degrees of freedom.

3.3. RDTs in HRC

HRC is increasingly driven by the development of collaborative robots (cobots) designed specifically to work alongside human operators [80]. Unlike traditional robots, which typically operate in isolated environments, cobots are equipped with advanced sensors and AI capabilities that enable them to interact safely and effectively with human partners. These robots are frequently used to perform repetitive, hazardous, and physically demanding tasks, improving both safety and productivity in the workplace [81-83]. In the broader context of industrial evolution, Industry 5.0 is emerging as a new paradigm that emphasizes the central role of humans in industrial and manufacturing processes [18]. Whereas traditional industrial automation focuses primarily on optimizing system performance by increasing output and reducing costs [84, 85], Industry 5.0 advocates integrating human skills, creativity, and decision-making abilities with advanced technologies. This human-centric approach fosters innovation by combining the strengths of humans and robots in collaborative settings [86-88].

In the context of HRC, DTs are increasingly being used to enhance safety, efficiency, and overall user experience, as shown in Table 4. Park et al. proposed a DT-based framework that uses an exoskeleton-type robotic system to improve manufacturing processes [89]. This framework provides an efficient method by which robotic systems can learn knowledge and skills from the virtual representations of collaborative robots and operators. In the real HRC environment, the human operator wears a VR device and the exoskeleton robot to work with the virtual robot within the DT, while the real robot performs the given task and transmits its sensor information to the virtual representation. Wang et al. introduced a deep learning (DL)-enhanced DT framework to improve safety and reliability in HRC [90]. This framework enables intelligent decision-making by accurately detecting and classifying human and robot actions. In their real-world experiments, the robotic system was trained to detect human collisions based on information from the virtual DT, which can help prevent harm to the human operator. Choi et al. developed an integrated mixed reality (MR) system for HRC that performs fast and accurate 3D offset-based safety distance calculation [91]. In their tests, the safety distance was measured from the RDT and the human skeleton rather than from 3D point cloud data. Furthermore, Kim et al. proposed a DT-based method for training cobots in virtual environments using synthetic data and a point cloud framework, addressing the time and cost constraints of real-world training [17]. Lastly, Malik and Brem explored the potential of DTs to enhance HRC in assembly by developing a DT-based virtual counterpart for validating and controlling a physical HRC system throughout its life cycle, and discussed the building blocks and advantages of this approach [53].
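Offset-based safety distances of the kind Choi et al. [91] compute from the RDT and the human skeleton can be sketched by modeling each robot link and each skeleton bone as a capsule, that is, a line segment inflated by a radius offset. The clearance between two capsules is the closest distance between their segments minus both radii. The following is a minimal sketch under that assumption, using the standard clamped closest-point formulation for two segments; it is not the implementation of Choi et al., and the joint coordinates and radii are hypothetical.

```python
import numpy as np


def segment_distance(p1, q1, p2, q2, eps=1e-12):
    """Closest distance between 3D segments p1-q1 and p2-q2."""
    p1, q1, p2, q2 = (np.asarray(v, dtype=float) for v in (p1, q1, p2, q2))
    d1, d2, r = q1 - p1, q2 - p2, p1 - p2
    a, e, f = d1 @ d1, d2 @ d2, d2 @ r
    if a <= eps and e <= eps:           # both segments degenerate to points
        s = t = 0.0
    elif a <= eps:                      # first segment is a point
        s, t = 0.0, np.clip(f / e, 0.0, 1.0)
    else:
        c = d1 @ r
        if e <= eps:                    # second segment is a point
            s, t = np.clip(-c / a, 0.0, 1.0), 0.0
        else:
            b = d1 @ d2
            denom = a * e - b * b       # zero when the segments are parallel
            s = np.clip((b * f - c * e) / denom, 0.0, 1.0) if denom > eps else 0.0
            t = (b * s + f) / e
            if t < 0.0:                 # clamp t and recompute s
                s, t = np.clip(-c / a, 0.0, 1.0), 0.0
            elif t > 1.0:
                s, t = np.clip((b - c) / a, 0.0, 1.0), 1.0
    return float(np.linalg.norm((p1 + s * d1) - (p2 + t * d2)))


def safety_distance(robot_links, human_bones):
    """Minimum clearance between capsules given as (start, end, radius) tuples.

    A negative value means the capsules overlap.
    """
    return min(
        segment_distance(a0, a1, b0, b1) - ra - rb
        for a0, a1, ra in robot_links
        for b0, b1, rb in human_bones
    )


robot = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 0.10)]   # one link, 10 cm radius
human = [((0.5, -1.0, 1.0), (0.5, 1.0, 1.0), 0.15)]  # one forearm bone, 15 cm radius
print(safety_distance(robot, human))
```

For these two capsules, the segments are 1 m apart, so the printed clearance is about 0.75 m. Because only a handful of segment pairs are evaluated per frame, this kind of calculation is much cheaper than comparing dense point clouds.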

Table 4. Summary of recent RDTs in HRC

| Ref. | Year | Physical part | Virtual platform | Data collection | Service |
|---|---|---|---|---|---|
| Park et al. [89] | 2024 | Doosan Robotics (6-DOF arm) | Unity 3D | Force, torque sensor | Real-time monitoring, path planning, collision detection, task execution |
| Wang et al. [90] | 2024 | Universal Robots UR10 | Unreal Engine 4 | Kinect V2 for images, depth data | Real-time monitoring, safety decision-making, task execution |
| Kim et al. [17] | 2024 | Universal Robots UR3 | RoboDK, machine learning toolkit | 3D cameras (Intel RealSense D435) | Object detection, gripping action optimization (RL), path planning |
| Choi et al. [91] | 2022 | Universal Robots UR3 | Unity 3D | Azure Kinect depth sensors, RGB-D sensors | Real-time monitoring, safety distance calculation, task assistance |
| Malik and Brem [53] | 2021 | Universal Robots UR5 | Tecnomatix Process Simulate | Proximity, photoelectric, property, joint value sensors | Real-time monitoring, task allocation, collision detection, path optimization |

RDTs: Robotic digital twins; HRC: human-robot collaboration; RL: reinforcement learning; RGB-D: red-green-blue-depth; DOF: degrees of freedom.

As shown in Table 4, platforms such as Tecnomatix Process Simulate emphasize task allocation and path optimization, Unreal Engine 4 is used for safety decision-making and task execution, and Unity 3D supports real-time monitoring, path planning, and safety distance calculation. RoboDK, combined with machine learning toolkits, focuses on object detection and reinforcement learning-based action optimization. The physical components, including Universal Robots arms (UR3, UR5, UR10) and a Doosan Robotics arm, are paired with data collection systems ranging from vision sensors (Azure Kinect and Kinect V2 depth sensors, RGB-D sensors, and 3D cameras) to force-torque, proximity, and joint value sensors. Together, these sensors enable force measurement, proximity sensing, and joint value monitoring, supporting real-time task execution and safety in HRC scenarios.

4. KEY TECHNOLOGIES IN EMBODIED AI

Embodied AI enables intelligent agents (e.g., robots) to perceive, act, and learn in real-world environments. The successful deployment of Embodied AI systems depends on several key technologies, including perception, interaction, learning, and motion control. Although these technologies have significantly advanced embodied intelligence, they still face notable limitations, such as the sim-to-real gap, sensor inaccuracies, and the difficulty of real-time adaptation. This section reviews the strengths and weaknesses of current Embodied AI technologies and identifies critical gaps that hinder their real-world implementation. Section 5 then explores how DTs can address these limitations by providing real-time virtual environments, improving data fidelity, and enhancing system adaptability for robust and scalable Embodied AI applications.

4.1. Sensing and perception

Sensing and perception are fundamental to Embodied AI because they are a prerequisite for agents to understand and interpret their working environment. Embodied AI systems typically employ multi-modal sensors, such as cameras, LiDAR, sonar, and tactile sensors, to capture diverse data streams. By integrating information from multiple sensor modalities, Embodied AI agents can build a comprehensive representation of the environment that supports accurate decision-making and action, as shown in Figure 2.
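One simple way to integrate redundant measurements of the same quantity, for example a depth reading from an RGB-D camera and one from LiDAR, is inverse-variance weighting, which is the optimal linear fusion when sensor errors are independent and Gaussian. The sketch below assumes exactly that, and the readings and variances are hypothetical.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) readings."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total  # fused value and its variance


camera_depth = (2.00, 0.04)  # metres, variance in m^2 (hypothetical)
lidar_depth = (2.20, 0.01)
print(fuse_estimates([camera_depth, lidar_depth]))  # about (2.16, 0.008)
```

The fused estimate leans toward the more precise sensor, and its variance is smaller than that of either input, which is the basic reason multi-sensor fusion improves the environment representation.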

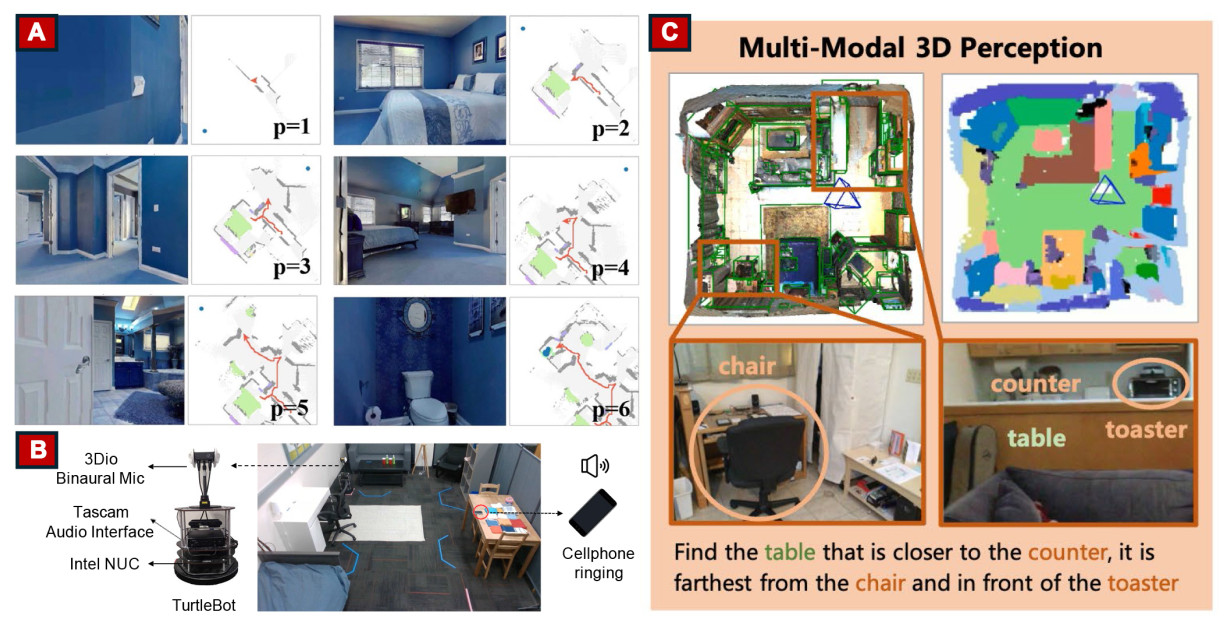

Figure 2. Examples of embodied perception. (A) First-person views of perception and bird's-eye views of the robot while it explores the environment [92]; (B) TurtleBot platform that collects both image and sound information [93]; (C) EmbodiedScan, which benchmarks language-grounded holistic 3D scene understanding [94].

In the realm of depth perception and environmental understanding, He et al. enhanced depth perception in dynamic physical environments by proposing a time-aware contrastive pre-training method [95]. This approach exploits temporal similarities between frames to learn robust depth representations, allowing agents to better understand and interact with their surroundings across various domains. However, the significant computational resources required for pre-training, especially with large datasets such as UniRGBD, may limit its applicability in resource-constrained scenarios. Similarly, Jing and Kong introduced a dynamic exploration policy for embodied perception that guides agents to efficiently gather training samples in new environments [96]. Although effective, this method faces challenges related to the computational cost of updating 3D semantic distribution maps and its reliance on depth sensors, which may limit its performance in environments with poor depth data or complex surfaces.
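Contrastive pre-training of this kind is commonly built on an InfoNCE-style loss. The sketch below shows a generic version in which embeddings of temporally close frames form positive pairs and the other frames in the batch act as negatives. It is an illustrative assumption, not the exact objective of He et al. [95]; the frame offset `k`, the temperature, and the random stand-in embeddings are hypothetical.

```python
import numpy as np


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE loss: row i of `anchors` should match row i of `positives`."""
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = a @ p.T / temperature               # scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))   # cross-entropy on the diagonal


def temporal_pairs(frame_embeddings, k=1):
    """Pair each frame with the frame k steps later (hypothetical pairing rule)."""
    return frame_embeddings[:-k], frame_embeddings[k:]


rng = np.random.default_rng(0)
clip = rng.normal(size=(8, 32))  # stand-in for per-frame depth embeddings
print(info_nce(*temporal_pairs(clip)))
```

Minimizing this loss pulls embeddings of neighboring frames together and pushes unrelated frames apart. The cost grows with batch size and embedding dimension, which is one source of the computational burden noted above.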

Focusing on communication and interpretability, Patel et al. studied how interpretable the communication between embodied agents is and how it relates to their perception of the surrounding physical space [97]. They developed communication strategies tightly linked to the agents' perceptions, improving their understanding of the environment. However, unstructured communication, while flexible, often produces less efficient and harder-to-interpret messages, reducing overall performance. The study also reveals a gap between the performance of structured communication and the ideal scenario, indicating room for improvement in communication strategies for complex real-world tasks. In the area of object detection and forecasting, which are often studied in isolation, Peri et al. proposed the FutureDet method, which reframes trajectory forecasting as future object detection based on LiDAR data [98]. While effective for linearly moving and stationary objects, the method struggles with non-linear trajectories and may overfit to constant-velocity motion. This limitation highlights the need for further refinement to improve adaptability in more dynamic and unpredictable environments. Guo et al. proposed an object-driven navigation strategy based on active perception and a semantic association model using RGB-D sensors, achieving improved navigation success and efficiency in dynamic environments [92].
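To make the constant-velocity limitation concrete, the sketch below extrapolates the last observed velocity of a track and measures the final displacement error on a straight track and on a curved (circular) one. This is a common forecasting baseline, not the FutureDet model itself; the time step, horizon, and tracks are hypothetical.

```python
import numpy as np


def constant_velocity_forecast(track, horizon, dt):
    """Extrapolate a (T, 2) track using the velocity between its last two points."""
    velocity = (track[-1] - track[-2]) / dt
    offsets = np.arange(1, horizon + 1)[:, None] * dt
    return track[-1] + offsets * velocity


def final_displacement_error(predicted, actual):
    return float(np.linalg.norm(predicted[-1] - actual[-1]))


dt = 0.1
t = np.arange(15) * dt
straight = np.stack([2.0 * t, 1.0 * t], axis=1)                # constant velocity
curved = np.stack([np.cos(2.0 * t), np.sin(2.0 * t)], axis=1)  # turning at 2 rad/s

for name, track in [("straight", straight), ("curved", curved)]:
    pred = constant_velocity_forecast(track[:10], horizon=5, dt=dt)
    print(name, final_displacement_error(pred, track[10:]))
```

The error is essentially zero on the straight track but grows quickly on the curved one, which is why models biased toward constant-velocity motion struggle with turning objects.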

When it comes to multi-modal perception and simulation, Gao et al. introduced the Sonicverse platform, which allows agents to perceive their surroundings using both sight and sound within a simulated environment[93]. Despite its potential, the high computational intensity required to render high-fidelity audio and visual simulations in real time may limit its accessibility for some applications. In addition, the platform excels in static environments, but dynamic changes present challenges, particularly in simulating complex interactions between moving objects and varying sound sources. Complementing this, Wang et al. presented the EmbodiedScan dataset, a comprehensive multi-modal 3D perception dataset that integrates visual, depth, and language data to facilitate holistic 3D scene understanding[94]. Although the dataset improves the ability of embodied agents to interpret and interact with complex indoor environments, the computational complexity of processing and integrating the diverse data streams poses challenges, especially in real-time applications.

4.2. Motion planning and control

Motion planning and control are fundamental components of Embodied AI, enabling robotic systems to navigate through and interact with their environments effectively. These processes can be broadly categorized into hierarchical and optimization-based planning, morphology-based control, and vision or transformer-based planning approaches, as shown in Figure 3.

Figure 3. Examples of embodied planning and control. (A) Safe collision-avoidance embodied navigation [99]; (B) Embodied motion control for the dual-robot system of the bionic robot controller [100]; (C) Example of motion planning in response to an operator's request [101]; (D) Skill Transformer, a unified policy model that directly maps visual observations (pixels) to actions, enabling a robot to autonomously perform complex, multi-step tasks such as mobile manipulation by inferring the required skills and executing precise control actions [102].

Hierarchical structures and optimization techniques are used to achieve effective motion planning and control. These approaches often break complex tasks down into manageable sub-tasks or optimize specific criteria to ensure efficient navigation and interaction with the environment. Gan et al. proposed a hierarchical planning and control method that uses RGB-D sensors for task and motion planning in dynamic environments [99]. Although the ThreeDWorld platform used in this work provides high-fidelity physical environments, the paper does not address limitations related to computational resources and real-time performance, which may affect its feasibility for large-scale applications. In addition, the experiments do not fully explore the diversity of physical properties in the environment, such as friction and gravity, and their potential impact on task performance. Li et al. introduced an optimization-based trajectory planning method based on vehicle kinematics and geometry [103]. The model achieves a good balance between time optimality and safety, placing discrete trajectory points more densely in bottleneck areas. However, the simulations mainly focus on simple static obstacle environments and do not consider dynamic obstacles or larger, more complex real-world traffic scenarios, such as urban driving. Furthermore, although field tests were conducted, their scale was small (a 1.75 m × 1.20 m indoor environment), and the method's applicability to full-scale real-world vehicles has not been verified.
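Optimization-based planners of this type usually discretize the trajectory and impose the vehicle kinematics as constraints between consecutive states. The following minimal sketch assumes the standard kinematic bicycle model with forward-Euler discretization; this is not necessarily the formulation used by Li et al. [103]. The wheelbase and time step are hypothetical values for a small indoor vehicle. An optimizer would drive `kinematic_residual` to zero for every pair of consecutive states while minimizing travel time subject to collision-avoidance constraints.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float        # rear-axle position (m)
    y: float
    heading: float  # rad


def bicycle_step(s, speed, steer, wheelbase=0.3, dt=0.05):
    """Advance one forward-Euler step of the kinematic bicycle model."""
    return State(
        x=s.x + speed * math.cos(s.heading) * dt,
        y=s.y + speed * math.sin(s.heading) * dt,
        heading=s.heading + speed * math.tan(steer) / wheelbase * dt,
    )


def kinematic_residual(s0, s1, speed, steer, wheelbase=0.3, dt=0.05):
    """Constraint violation between consecutive states (all zeros when feasible)."""
    pred = bicycle_step(s0, speed, steer, wheelbase, dt)
    return (s1.x - pred.x, s1.y - pred.y, s1.heading - pred.heading)


def rollout(start, controls, **kw):
    """Simulate a sequence of (speed, steering angle) controls."""
    path = [start]
    for speed, steer in controls:
        path.append(bicycle_step(path[-1], speed, steer, **kw))
    return path


path = rollout(State(0.0, 0.0, 0.0), [(1.0, 0.2)] * 20)
print(path[-1])
```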

Some researchers embed control mechanisms directly into the physical design, or morphology, of the robot. Morphology-based control methods exploit the structure of the robot to reduce computational requirements and enhance task performance, although they may face limitations in adaptability and sensor precision. Pervan and Murphey presented a novel algorithmic design method that transfers task information from centralized control to the physical structure of robots, enabling task embodiment through physical morphology [104]. However, the study does not address dynamic obstacles or complex external environments, which may limit its applicability. In addition, the simulation environment is idealized, and the method's robustness in real-world physical systems remains insufficiently validated. Gan et al. explored a morphology-based approach with a bionic robot controller that integrates vision and motion planning, showing promise in dual-robot collaboration [100]. Although a solution for dynamic environments is proposed, the experimental setup is relatively idealized and does not sufficiently validate performance in more complex and unpredictable scenarios. In addition, detailed analysis and testing of dynamic obstacles and multi-agent interaction are lacking.

Vision-based perception and advanced machine learning techniques, such as transformers, are also used to enable robust motion planning and control in complex environments. These approaches aim to improve the robot's ability to understand and react to dynamic and uncertain environments. Behrens et al. developed an embodied reasoning system that integrates vision-based scene understanding with action planning, achieving significant success in dynamic environments but requiring further refinement for complex real-world applications [101]. Huang et al. introduced the Skill Transformer, a transformer-based policy that integrates visual and proprioceptive inputs; it excels in long-horizon tasks but is limited by computational efficiency [102]. The Skill Transformer takes as input a sequence of tokens that encode the observations over time. Each token represents the state at one time step and combines visual observations (depth only), non-visual observations (joint angles and the holding state of the end effector), and a positional embedding. The Skill Inference module then processes the tokens with a causal transformer; because of the causal mask, the prediction at each step depends only on the current and earlier tokens, and the module outputs a one-hot skill vector representing the skill to execute. Finally, the Action Inference module uses the inferred skill, the observations, and previous actions to determine the low-level action that the robot executes.
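The data flow described above can be sketched with NumPy as follows. This is a heavily simplified, untrained illustration, not the Skill Transformer of Huang et al. [102]. A single attention head with random weights replaces the full causal transformer, the depth features are random stand-ins for encoded depth images, and every size (`T`, `D_DEPTH`, `N_SKILLS`, and so on) is hypothetical. The sketch shows how the tokens are assembled, how the causal mask prevents each step from attending to future tokens, and how a one-hot skill conditions the action head.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical sizes: time steps, depth features, proprioception, model width, skills, action dims.
T, D_DEPTH, D_PROP, D_MODEL, N_SKILLS, D_ACT = 6, 16, 8, 32, 4, 7

# Random, untrained weights stand in for learned parameters.
W_OBS = rng.normal(0.0, 0.1, (D_DEPTH + D_PROP, D_MODEL))
W_Q, W_K, W_V = (rng.normal(0.0, 0.1, (D_MODEL, D_MODEL)) for _ in range(3))
W_SKILL = rng.normal(0.0, 0.1, (D_MODEL, N_SKILLS))
W_ACT = rng.normal(0.0, 0.1, (N_SKILLS + D_MODEL + D_ACT, D_ACT))


def positional_embedding(length, dim):
    """Sinusoidal positional embedding, one row per time step."""
    pos = np.arange(length)[:, None]
    i = np.arange(dim)[None, :]
    angle = pos / np.power(10000.0, (2 * (i // 2)) / dim)
    return np.where(i % 2 == 0, np.sin(angle), np.cos(angle))


def embed_tokens(depth_feats, proprio):
    """One token per step: depth features + joint angles/holding state + position."""
    x = np.concatenate([depth_feats, proprio], axis=1) @ W_OBS
    return x + positional_embedding(len(x), D_MODEL)


def causal_self_attention(x):
    q, k, v = x @ W_Q, x @ W_K, x @ W_V
    scores = q @ k.T / np.sqrt(x.shape[1])
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)  # mask future steps
    scores = np.where(future, -np.inf, scores)
    scores -= scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)                                  # masked entries become 0
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v


def skill_inference(h):
    """One-hot skill vector per step (argmax of a linear skill head)."""
    onehot = np.zeros((len(h), N_SKILLS))
    onehot[np.arange(len(h)), (h @ W_SKILL).argmax(axis=1)] = 1.0
    return onehot


def action_inference(skill, h, prev_actions):
    """Low-level action from the skill, observation features, and previous action."""
    return np.tanh(np.concatenate([skill, h, prev_actions], axis=1) @ W_ACT)


depth = rng.normal(size=(T, D_DEPTH))   # stand-in for encoded depth images
proprio = rng.normal(size=(T, D_PROP))  # stand-in for joint angles + holding state
h = causal_self_attention(embed_tokens(depth, proprio))
skills = skill_inference(h)
prev_actions = np.zeros((T, D_ACT))     # placeholder for previously executed actions
actions = action_inference(skills, h, prev_actions)
print(skills.argmax(axis=1), actions.shape)
```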

4.3. Learning and reasoning

Learning and adaptation are central to the development of intelligent Embodied AI agents, enabling them to optimize their behavior through experience and adapt to new tasks, environments and situations, as shown in Figure 4.

Figure 4. Examples of embodied learning and reasoning. (A) Example of navigation and object detection using an LLM in unknown environments [105]; (B) The iSEE framework, which probes the environmental information learned by the robot as it travels through the environment [106]; (C) Example of reasoning and producing navigation decisions based on prior knowledge from a knowledge graph and a pre-trained model [107]. LLM: Large language model; iSEE: interpretability system for embodied agents.

Reinforcement learning has become a vital tool in embodied intelligence. It allows agents to interact with physical or simulated environments and progressively learn to handle complex conditions and tasks. A significant strength of reinforcement learning in this context is its ability to adapt and learn online. By trying out actions and observing their consequences, reinforcement learning algorithms continually refine their policies in response to changing circumstances. This adaptability is especially beneficial in dynamic or uncertain environments, where predefined rules may not be sufficient. Another key advantage of reinforcement learning is that it does not require a precise model of the environment or the agent's dynamics. Many real-world systems, such as those involving complex contact forces or multi-body interactions, are difficult to describe with accurate mathematical models. Reinforcement learning circumvents this challenge by using feedback directly from the environment to improve the agent's behavior. As a result, it can be applied to a broader range of problems where modeling is either infeasible or extremely costly. Zhao et al. introduced the embodied representation and reasoning architecture, which combines LLMs with reinforcement learning, depth, and tactile sensors to enhance long-horizon manipulation tasks[108]. Despite its success, the system is constrained by its reliance on simulated training data and hardware limitations. Liu et al. proposed a hierarchical decision framework for multi-agent embodied visual semantic navigation, which uses reinforcement learning and scene prior knowledge, along with RGB and depth sensors[109]. This framework improves collaboration and efficiency in navigation tasks, though it faces challenges related to communication bandwidth limitations and sensor accuracy.
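The model-free property is visible in the one-step Q-learning update, a standard example of such algorithms; the works cited above may use other reinforcement learning methods. The agent improves its action-value estimate $Q(s,a)$ using only an observed transition $(s_t, a_t, r_{t+1}, s_{t+1})$, a learning rate $\alpha$, and a discount factor $\gamma$. No transition probabilities or dynamics equations appear anywhere in the update:

$$
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]
$$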

However, reinforcement learning models provide little to no inherent interpretability regarding the concepts and skills they have learned or the reasoning behind their actions. Dwivedi et al. investigated the environmental information encoded in the internal representations of embodied agents trained with reinforcement learning and supervised learning [106]. The study introduces an interpretability framework to analyze what information AI agents encode about their surroundings, such as obstacle locations, target visibility, and reachable areas, which enable effective movement and task completion. Another major challenge in applying reinforcement learning to real-world robotic tasks is its low sample efficiency. Most reinforcement learning algorithms learn a policy by interacting with the environment many times, which can be costly and risky on real robot systems. Each interaction involves physical motions that wear the hardware and may pose safety hazards; for instance, a small mistake during training can damage robot parts or endanger nearby people. To reduce these risks, researchers commonly train agents in simulation first, where mistakes are safer and cheaper. However, this approach introduces the sim-to-real gap. Even the best simulations cannot perfectly capture every detail of the physical world, such as slight differences in friction, vibrations in the joints, or unexpected sensor noise. Because of these discrepancies, a policy that performs well in simulation may fail when transferred to a real robot.
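A worked example with hypothetical numbers shows how a friction mismatch alone can break a policy tuned in simulation. Suppose an open-loop pushing policy gives a sliding block an initial speed $v_0$ so that it stops at a target distance $d^{*} = 0.5$ m, assuming the simulated friction coefficient $\mu_{\text{sim}} = 0.30$ and $g = 9.81$ m/s$^2$. Under Coulomb friction the stopping distance is $d = v_0^2 / (2 \mu g)$, so the policy learns

$$
v_0^2 = 2\,\mu_{\text{sim}}\, g\, d^{*} = 2 \times 0.30 \times 9.81 \times 0.5 = 2.943\ \text{m}^2/\text{s}^2 .
$$

If the real surface has $\mu_{\text{real}} = 0.25$, the same command yields

$$
d_{\text{real}} = \frac{v_0^2}{2\,\mu_{\text{real}}\, g} = \frac{2.943}{2 \times 0.25 \times 9.81} = 0.600\ \text{m},
$$

a 20% overshoot, because $d_{\text{real}} / d^{*} = \mu_{\text{sim}} / \mu_{\text{real}} = 1.2$.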

Incorporating language and vision models has been another focus area, with the aim of improving how agents interact with their environment. Dorbala et al. developed a language-guided exploration method that uses LLMs and vision-language models with RGB and depth sensors, achieving superior zero-shot object navigation performance [105]. However, this method is computationally intensive and heavily dependent on high-quality sensor data. Similarly, Sermanet et al. introduced the RoboVQA framework, which uses video-conditioned contrastive captioning with RGB and depth sensors for multi-modal long-horizon reasoning in robotics [110]. Although this framework significantly improves task execution, it requires high computational resources and high-quality sensor data. Another category of approaches uses 3D data for visual learning and navigation tasks. Zhao et al. proposed a 3D divergency policy based on real 3D point cloud data, effectively improving robotic active visual learning in novel environments [111]. However, this method introduces challenges related to handling sensor noise and the high resource demands of processing 3D data.
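The resource demands of 3D data are often reduced by downsampling point clouds before further processing. The following minimal sketch shows voxel-grid downsampling, which replaces all points inside each cubic voxel with their centroid. It is a generic preprocessing step, not part of the method of Zhao et al. [111], and the voxel size and synthetic cloud are hypothetical.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points falling in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # keep a 1-D index across NumPy versions
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate points per voxel
    return sums / counts[:, None]


rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(10_000, 3))  # stand-in for a depth-sensor scan (m)
reduced = voxel_downsample(cloud, voxel_size=0.1)
print(cloud.shape, "->", reduced.shape)
```

Averaging within each voxel also smooths some sensor noise, at the cost of fine geometric detail, so the voxel size trades resource demands against resolution.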

Finally, the integration of cross-modal reasoning and transformer-based architectures has been explored to enhance adaptability in complex environments. Cross-modal reasoning refers to the ability of an AI system to integrate and reason over information from multiple sensory or data modalities, such as vision, language, audio, and tactile inputs. Gao et al. introduced the cross-modal knowledge reasoning framework, which utilizes the transformer architecture along with RGB sensors and object detectors[107]. This framework effectively enhances navigation and object localization in embodied referring expression tasks. However, it is challenged by computational complexity and a heavy reliance on pre-trained models.
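Cross-modal reasoning in transformer-based systems is commonly realized with cross-attention, in which language tokens act as queries over visual features. The sketch below is a generic, untrained illustration under that assumption, not the framework of Gao et al. [107]. Word embeddings of a referring expression attend over feature vectors of detected objects, and the object receiving the most attention is taken as the referent. The identity projections and toy features are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(text_tokens, object_feats, w_q, w_k, w_v):
    """Language tokens (L, d) attend over detected-object features (N, d)."""
    q, k, v = text_tokens @ w_q, object_feats @ w_k, object_feats @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[1]), axis=1)  # (L, N)
    return weights @ v, weights


def ground_referent(weights):
    """Index of the object that receives the most attention, averaged over words."""
    return int(weights.mean(axis=0).argmax())


d = 3
objects = 3.0 * np.eye(d)                                  # three toy object features
expression = np.array([[0.0, 0.2, 3.0], [0.1, 0.0, 2.5]])  # words resembling object 2
eye = np.eye(d)                                            # identity projections
fused, weights = cross_attention(expression, objects, eye, eye, eye)
print(ground_referent(weights))
```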

4.4. Interaction and collaboration

Interaction and collaboration are essential for Embodied AI systems to function effectively in environments where they must work alongside humans, other agents, or complex systems. Embodied AI agents are designed to interact with their surroundings, communicate with other agents, and collaborate with humans to achieve shared goals, as shown in Figure 5.

In the domain of real-time interaction and sensory integration, Sagar et al. proposed the BabyX platform, which employs visual and touch sensors for real-time interaction and event processing in a virtual infant model[114]. This platform achieves realistic caregiver-infant interactions, demonstrating the potential of Embodied AI in simulating complex human-like interactions. However, the platform faces challenges with sensor sensitivity and the complexity of motor execution, which can influence overall performance in dynamic scenarios. Similarly, Legrand et al. developed a variable stiffness anthropomorphic finger that uses pneumatic pressure sensors to dynamically adjust joint stiffness[115]. This innovation enables real-time adaptability and enhanced interaction stability, although it requires complex control systems and may experience delays during rapid force transitions, which presents challenges in fast-paced environments.

For cognitive modeling and perspective-taking, Fischer and Demiris introduced a computational model for visual perspective-taking[116]. This model uses simulated visual sensors and action primitives to enable efficient cognitive modeling and rapid response times, making it suitable for tasks requiring quick mental adaptations. However, its reliance on a simulated environment and task-specific optimizations limits its applicability to more generalized real-world settings, where unexpected variables and broader contexts may come into play. Focusing on human-in-the-loop learning and simulation, Long et al. proposed an interactive simulation platform designed for surgical robot learning[112]. This platform leverages haptic devices and visual sensors to enhance learning efficiency through embodied intelligence, where human input is directly integrated into the learning process. The platform achieves realistic interactions and improves the training of surgical robots, though it faces challenges related to the complexity of the simulation and its heavy dependence on expert demonstrations, which can limit scalability and broader application.
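A basic building block of visual perspective-taking is deciding whether a target lies within another agent's field of view. The following minimal geometric sketch covers the 2D case, assuming a cone-shaped field of view and ignoring occlusion. It is not the model of Fischer and Demiris [116], and the field-of-view angle, range, and object positions are hypothetical.

```python
import math


def can_see(observer, facing, target, fov_deg=120.0, max_range=5.0):
    """True if `target` lies inside the observer's viewing cone (2D, no occlusion)."""
    dx, dy = target[0] - observer[0], target[1] - observer[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return True
    if dist > max_range:
        return False
    fx, fy = facing
    cos_angle = (dx * fx + dy * fy) / (dist * math.hypot(fx, fy))
    return cos_angle >= math.cos(math.radians(fov_deg / 2.0))


human_pos, human_facing = (0.0, 0.0), (1.0, 0.0)
objects = {"cup": (2.0, 0.5), "wrench": (-1.5, 0.0), "box": (0.5, 3.0)}
print({name: can_see(human_pos, human_facing, p) for name, p in objects.items()})
```

A robot could use such a check, for example, to avoid referring to objects its human partner cannot currently see.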

In the area of VR interfaces and interaction, Hashemian et al. developed leaning-based interfaces that use head movement sensors in head-mounted displays to improve VR locomotion and object interaction[113]. These interfaces improve spatial presence and multitasking performance in VR environments, offering a more immersive experience. However, the interfaces exhibit lower precision compared to physical walking and impose increased physical demands on users, which could impact usability and comfort during extended VR sessions.
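Leaning-based interfaces map a measured head or body offset to a locomotion velocity. The transfer function below is a hypothetical illustration, not the mapping used by Hashemian et al. [113]. A dead zone suppresses unintended motion near the neutral pose, and a power-law exponent gives finer control for small leans; all parameter values are assumptions.

```python
import math


def lean_to_speed(lean_m, dead_zone=0.02, max_lean=0.15, max_speed=2.0, exponent=1.5):
    """Map a forward (+) or backward (-) head offset in metres to a speed in m/s."""
    magnitude = abs(lean_m)
    if magnitude <= dead_zone:
        return 0.0  # ignore small postural sway
    normalized = min((magnitude - dead_zone) / (max_lean - dead_zone), 1.0)
    return math.copysign(max_speed * normalized ** exponent, lean_m)


for lean in (0.01, 0.05, 0.10, -0.15, 0.30):
    print(lean, round(lean_to_speed(lean), 3))
```

The dead zone and clamping illustrate the precision trade-off noted above: small, deliberate leans near the threshold produce little or no motion, unlike physical walking.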

5. PERSPECTIVE OF SYNERGIES BETWEEN DTS AND EMBODIED AI

The intersection of DTs and Embodied AI presents an unprecedented opportunity to refine intelligent systems for dynamic and uncertain environments. While DTs create real-time, high-fidelity virtual representations of the physical world, Embodied AI uses this virtual space for perception, learning, and interaction. This synergy enables embodied agents to train in digital replicas of real-world environments, reducing training costs, minimizing safety risks, and improving real-time adaptability. This section discusses novel perspectives on the synergy between DTs and Embodied AI to anticipate the future development of DT-based Embodied AI systems.

5.1. Embodied AI training based on DTs virtual space

DT technologies play a central role by creating a real-time virtual environment. As shown in Tables 2-4, DTs are designed to mirror physical systems in real time. This real-time synchronization offers significant advantages for Embodied AI training, enabling more efficient learning, perception, and decision-making in complex environments. DTs continuously collect and process data from physical systems using sensors such as depth cameras, RGB-D sensors [75, 91], and force-torque sensors [89]. The collected data are then mapped into the virtual space so that it reflects the current physical state. Real-time feedback is essential for Embodied AI, as it allows AI models to adjust their decisions to current environmental changes.
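The synchronization step described above can be sketched as a twin state that keeps the latest value of each sensor signal together with its source timestamp. This is a minimal illustration with hypothetical class and signal names, not an existing DT framework. Out-of-order messages are ignored so that the virtual state never moves backwards in time, and stale signals are flagged so that an embodied agent does not act on outdated data.

```python
from dataclasses import dataclass, field


@dataclass
class TwinState:
    values: dict = field(default_factory=dict)  # latest value per signal
    stamps: dict = field(default_factory=dict)  # source timestamp (s) per signal

    def ingest(self, signal, value, stamp):
        """Apply a sensor message unless it is older than what the twin already holds."""
        if stamp >= self.stamps.get(signal, float("-inf")):
            self.values[signal] = value
            self.stamps[signal] = stamp

    def stale_signals(self, now, max_age):
        """Signals whose newest data is older than `max_age` seconds."""
        return sorted(s for s, t in self.stamps.items() if now - t > max_age)


twin = TwinState()
twin.ingest("joint_angles", [0.0, 0.4, -1.2], stamp=10.00)
twin.ingest("wrist_force", 3.1, stamp=10.02)
twin.ingest("joint_angles", [0.0, 0.3, -1.1], stamp=9.95)  # late packet, ignored
print(twin.values["joint_angles"], twin.stale_signals(now=10.06, max_age=0.05))
```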

In comparison to traditional simulations, DTs maintain a continuous, real-time connection with physical systems. Whereas traditional simulations often create isolated virtual models that approximate real-world conditions, DTs synchronize with the actual physical environment. This close coupling allows the embodied agent to operate within a virtual space that more accurately mirrors real-world conditions. As a result, embodied agents can develop and refine their decision-making processes based on real-time physical data, which can substantially narrow the sim-to-real gap. In addition, DTs emphasize real-time interactivity, which is dynamic and often connected to the ongoing processes of physical systems. This two-way interaction means that the virtual model is continuously updated with data from the physical system, while decisions made within the virtual environment can directly influence the physical system. Finally, DTs are based on actual sensor data from physical systems, providing a more realistic and precise training environment for Embodied AI. Traditional simulations often depend on predefined models that may not fully capture the complexity or variability of real-world scenarios. In contrast, DTs feed real-world data, such as force measurements, temperature readings, or positional data, into the virtual environment, so that Embodied AI is exposed to more accurate and varied conditions during training. This data-driven approach enables Embodied AI to better anticipate and respond to the unpredictable and dynamic nature of real-world situations. Table 5 summarizes the comparison between traditional simulations and DTs. DTs offer greater flexibility by simulating complex environments, enabling AI to train in a wide range of scenarios and improving its ability to adapt to real-world challenges.

Table 5. Comparison of traditional simulations and DTs

| Aspect | Traditional simulations | DTs |
|---|---|---|
| Connection with physical systems | Often isolated virtual models, approximate real-world conditions | Maintains continuous, real-time synchronization with physical systems |
| Real-time feedback | Limited or no real-time feedback, usually operates on predefined scenarios | Provides real-time feedback based on sensor data, enabling dynamic decision-making |
| Data source | Typically uses predefined models or simulated data | Utilizes real-world sensor data for accurate reflection of physical states |
| Interactivity | Limited or static interaction with the system, lacks two-way communication | Two-way interaction with physical systems, virtual models directly influence physical systems |
| Flexibility in environment simulation | Limited flexibility in simulating complex, changing environments | High flexibility, easily simulates dynamic, complex environments and adapts to real-time changes |
| Application to embodied AI training | Often struggles to train AI models that adapt well to real-world environments | Enables Embodied AI to refine decision-making and adapt to dynamic real-world environments, reducing the "sim-to-real gap" |

DTs: Digital twins; AI: artificial intelligence.

However, using DTs as virtual training environments also faces challenges, such as data latency and excessive data transfer. Several practical solutions and technologies can help address these challenges: (1) Data Compression and Optimization: Data compression techniques can reduce the size of data before transmission while retaining essential information. This can be achieved through methods such as principal component analysis (PCA) for dimensionality reduction [117], neural network-based compression techniques such as auto-encoders that extract compact data representations [118], and video or image compression using efficient formats; (2) Decentralized Architectures: Distributing data processing and storage across multiple nodes is an effective way to avoid the bottlenecks of centralized systems. This can be implemented by using Apache Kafka [119] for real-time data streaming and communication between distributed nodes and by employing distributed databases such as MongoDB or Cassandra [120] for scalable and efficient data storage; (3) Hybrid and Multi-Cloud Architectures: Adopting hybrid and multi-cloud architectures can provide flexible and scalable ways to manage data processing and storage. Multi-cloud architectures allow organizations to distribute workloads across different cloud providers, reducing dependency on a single platform and improving system resilience. Container orchestration tools such as Kubernetes can simplify integration across cloud environments, while data transfer tools such as AWS DataSync or Google Transfer Appliance streamline data movement [121]; (4) 5G/6G Communication Networks: Advanced network technologies such as 5G and emerging 6G standards can provide high-bandwidth, low-latency communication, enabling real-time data transmission and reducing delays in critical operations [122]. These networks are particularly effective for supporting massive IoT deployments and high-speed data exchange in dynamic environments [123].
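To make the PCA option concrete, the following minimal sketch compresses a batch of synthetic DT telemetry frames by projecting them onto their top-k principal components, computed via a singular value decomposition. It assumes that the telemetry is strongly correlated across channels (here generated from three latent factors) and that the mean and principal directions are sent to the receiver once, so only k coefficients per frame cross the network afterwards. The function names, data sizes, and noise level are illustrative assumptions, not part of the cited works.

```python
import numpy as np


def pca_compress(frames: np.ndarray, k: int):
    """Project frames (n_samples x n_features) onto their top-k principal components."""
    mean = frames.mean(axis=0)
    centered = frames - mean
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:k]                 # (k, n_features): shared once with the receiver
    codes = centered @ components.T     # (n_samples, k): transmitted per frame
    return mean, components, codes


def pca_reconstruct(mean: np.ndarray, components: np.ndarray, codes: np.ndarray) -> np.ndarray:
    """Approximate the original frames on the receiving side."""
    return codes @ components + mean


rng = np.random.default_rng(0)
# Synthetic telemetry (assumption): 64 correlated channels driven by 3 latent factors plus small noise.
latent = rng.normal(size=(500, 3))
mixing = rng.normal(size=(3, 64))
frames = latent @ mixing + 0.01 * rng.normal(size=(500, 64))

mean, components, codes = pca_compress(frames, k=3)
recon = pca_reconstruct(mean, components, codes)
rel_err = np.linalg.norm(frames - recon) / np.linalg.norm(frames)
print(f"values per frame: {frames.shape[1]} -> {codes.shape[1]}, relative error: {rel_err:.4f}")
```

In practice, the number of retained components would be chosen from the explained variance of real sensor data, and the principal directions would need periodic refreshing as the physical system drifts.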

5.2. Embodied AI with human DTs

As shown in Sections 3.3 and 4.4, DTs and Embodied AI are widely applied in HRC. However, only a few studies consider the human aspect, and many treat humans merely as simple obstacles. If human behavior and status become more predictable, robots can provide more efficient strategies and make data-informed decisions to assist human operators. Yet modeling and simulating human states and behaviors in HRC is difficult. First, compared to robotic systems, human modeling requires larger datasets, because it involves more complex geometric configurations, the acquisition of biological signals, and significant variations among individuals. Second, human behavior modeling demands higher real-time performance due to the dynamic and unpredictable nature of human actions; this involves processing continuous data streams from multiple sensors to accurately track and respond to human movements and states. In addition, human performance and behavior are subject to subjective influences, such as psychological stress and fatigue, which can significantly affect both physical and cognitive functions.

The development prospects of human digital twins (HDTs) are promising, particularly for enhancing HRC [124]. HDTs hold significant potential for improving the interaction between embodied agents and humans [125]. Despite ongoing debates on the definition of HDTs, it is generally agreed that HDTs aim to collect comprehensive information to create a virtual digital human [126]. As shown in Figure 6, HDTs collect both physical and mental data from humans, allowing AI to adjust its behavior in real time during virtual training. Instead of treating humans as simple obstacles or generic agents, HDTs enable AI to recognize and adapt to human physical and mental states, such as fatigue, stress, or movement patterns. This adaptive capability is crucial for building human-centric systems, in which AI can respond more intelligently and sensitively to human needs and conditions.

Figure 6. Common types of information collected by HDTs include: (A) physiological data refers to the biological information that reflects the functions and processes of the human body, such as heart rate, blood pressure, body temperature, respiratory rate, and ECG readings; (B) mental data refers to information related to an individual's emotional states, encompassing aspects of mental health, mood, and psychological well-being; (C) ability data refers to information that captures an individual's capabilities, reflecting how well a person can execute certain functions, which can be physical, such as motor skills, or cognitive, such as problem-solving abilities; (D) cognitive data refers to information related to an individual's reasoning processes involved in acquiring knowledge, problem-solving, memory, attention, and decision-making; and (E) action data refers to information that captures an individual's physical movements, behaviors, or actions in response to stimuli or within a specific environment. ECG: Electrocardiogram.
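One way to picture an HDT record is as a time-stamped snapshot grouped by the five information types of Figure 6. The sketch below is a hypothetical schema, not a standard one: the class name, the example keys ("fatigue", "stress"), and the adaptation rule that scales robot speed linearly with the worse of estimated fatigue and stress (down to a floor of 0.3) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HumanTwinSnapshot:
    """One time-stamped HDT state, grouped by the five information types of Figure 6.

    Field names and example keys are illustrative assumptions, not a standard schema.
    """
    timestamp: float
    physiological: Dict[str, float] = field(default_factory=dict)  # e.g. heart rate (bpm), estimated fatigue in [0, 1]
    mental: Dict[str, float] = field(default_factory=dict)         # e.g. estimated stress in [0, 1]
    ability: Dict[str, float] = field(default_factory=dict)        # e.g. reach distance (m), grip force (N)
    cognitive: Dict[str, float] = field(default_factory=dict)      # e.g. attention score in [0, 1]
    action: List[str] = field(default_factory=list)                # e.g. recognized activity labels


def robot_speed_scale(snapshot: HumanTwinSnapshot) -> float:
    """Hypothetical adaptation rule: slow the robot linearly as fatigue or stress rises.

    Returns a factor in [0.3, 1.0]; the 0.3 floor and the linear form are assumptions.
    Missing estimates are treated as 0 (no slowdown).
    """
    fatigue = snapshot.physiological.get("fatigue", 0.0)
    stress = snapshot.mental.get("stress", 0.0)
    load = min(max(fatigue, stress, 0.0), 1.0)  # worst of the two states, clamped to [0, 1]
    return 1.0 - 0.7 * load


snap = HumanTwinSnapshot(
    timestamp=12.5,
    physiological={"heart_rate": 96.0, "fatigue": 0.6},
    mental={"stress": 0.2},
    action=["reaching"],
)
print(f"speed scale: {robot_speed_scale(snap):.2f}")
```

Keeping each category in its own field lets an embodied agent consume only the parts of the twin it needs, while the snapshot as a whole can be logged to replay human conditions during virtual training.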

In addition, HDTs have wide potential applications in human-robot interaction. They can support real-time monitoring of the human body state during human-robot interaction. Using AI technologies, a robotic system can simultaneously track 3D posture, action intention, and ergonomic risk, providing a more holistic view of the human operator than traditional methods [127]. Beyond body information, HDTs can continuously monitor human fatigue during human-robot collaborative assembly. They can assess a worker's fatigue in real time during ongoing tasks and reallocate tasks between humans and robots to reduce physical fatigue, thus optimizing the overall HRC in manufacturing [128]. Moreover, training robots to interact with humans in real time carries inherent risks, especially in scenarios that require immediate responses, such as emergency interventions or collaborative tasks. By simulating human responses and conditions within a virtual space, HDTs allow robots to learn without posing a direct threat to human safety. This approach helps robots develop the necessary skills and reaction times without the risk of causing physical harm during training [91].
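The idea of reallocating tasks according to a worker's fatigue can be illustrated with a toy greedy scheduler. This is not the method of [128]: the exponential fatigue-accumulation model, the rate constant, the 0.6 fatigue limit, and the task fields are all assumptions. A task stays with the human while the predicted fatigue remains below the limit; otherwise it is handed to the robot if the robot can perform it.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Task:
    name: str
    load: float           # physical load rate in [0, 1] (assumed scale)
    duration_s: float
    robot_capable: bool


def fatigue_after(fatigue: float, task: Task, rate: float = 0.002) -> float:
    """Assumed exponential accumulation: fatigue approaches 1 faster under heavier, longer tasks."""
    return 1.0 - (1.0 - fatigue) * math.exp(-rate * task.load * task.duration_s)


def allocate(tasks: List[Task], fatigue: float, limit: float = 0.6) -> Tuple[List[Tuple[str, str]], float]:
    """Greedy sketch: keep a task with the human unless it would push predicted fatigue above
    `limit` and the robot can take it. Returns the plan and the final predicted human fatigue."""
    plan = []
    for task in tasks:
        predicted = fatigue_after(fatigue, task)
        if predicted > limit and task.robot_capable:
            plan.append((task.name, "robot"))   # human fatigue is unchanged
        else:
            # Tasks the robot cannot do stay with the human, even above the limit.
            plan.append((task.name, "human"))
            fatigue = predicted
    return plan, fatigue


tasks = [
    Task("fetch panel", load=0.8, duration_s=300, robot_capable=True),
    Task("align panel", load=0.3, duration_s=120, robot_capable=False),
    Task("drive screws", load=0.9, duration_s=400, robot_capable=True),
    Task("inspect", load=0.1, duration_s=60, robot_capable=False),
]
plan, final_fatigue = allocate(tasks, fatigue=0.2)
for name, agent in plan:
    print(f"{name}: {agent}")
print(f"predicted human fatigue at end: {final_fatigue:.2f}")
```

In an HDT setting, the starting fatigue value would come from the twin's live estimate rather than a constant, and the same scheduler could be exercised against simulated workers before being used with real operators.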

The future of HDTs in practical applications of Embodied AI holds significant promise. HDTs can enable embodied agents to work more effectively in dynamic and human-centric environments by integrating real-time data on human physical and mental states. For example, in healthcare, HDTs can simulate patient conditions, allowing embodied agents to provide personalized care, such as monitoring fatigue or stress levels and adapting their actions to meet individual needs [129]. In manufacturing, HDTs can help improve worker safety and efficiency by analyzing operator movements and suggesting optimized workflows to reduce strain or prevent injuries [130]. Similarly, in autonomous systems, HDTs can create realistic simulations of human behavior, enabling embodied agents to predict and respond to human actions more intelligently during tasks such as emergency evacuations or collaborative work [131]. These advances can lead to safer and more efficient interactions between humans and embodied agents, supporting a wide range of industries that require seamless and adaptive collaboration.

However, constructing HDTs is highly challenging, particularly given the complexity of human behavior and environmental interactions in large-scale implementations. To address this challenge while maintaining real-time adaptability, the following technologies can be applied: (1) Multi-modal Data Collection and Fusion: Use biosensors [e.g., electroencephalography (EEG), electrocardiogram (ECG)], environmental sensors (e.g., temperature, humidity, gas sensors), and visual sensors (e.g., RGB cameras, depth sensors) [132]. Employ data fusion methods such as Kalman filters, Bayesian networks, or DL to integrate multiple data streams into a comprehensive and accurate representation of human and environmental states; (2) Real-Time Data Processing: Implement edge computing on devices such as NVIDIA Jetson [133] or Intel Movidius [134] for local data processing to reduce latency, and use real-time data streaming frameworks such as Apache Kafka or Apache Flink to support fast responses to dynamic environmental changes; (3) Dynamic Modeling and Learning: Use neural architectures suited to relational and temporal data, such as graph neural networks (GNNs) or recurrent neural networks (RNNs), to model complex relationships in dynamic environments, and apply reinforcement learning algorithms to optimize adaptation and decision-making; (4) DTs Simulation Platforms: Leverage simulation tools such as NVIDIA Isaac Sim, Unity, or Gazebo to create realistic virtual environments, and integrate data-driven modeling in Python or MATLAB to update simulations with real-time input. Together, these technologies can enable HDT systems to operate effectively in complex dynamic environments while supporting reliability, scalability, and ethical compliance.
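As a minimal illustration of Kalman-filter fusion, the sketch below estimates a single slowly drifting quantity (here, heart rate) from two sensors with different noise levels, using a random-walk state model and one sequential update per available measurement. The scenario is an assumption: the two streams stand in for an ECG-derived rate and a noisier wrist-worn sensor, the noise variances are taken as known, and a block of wrist samples is dropped to show how the filter continues on the remaining sensor. Real HDT fusion would typically involve multi-dimensional states and learned or calibrated noise models.

```python
import numpy as np


def kalman_fuse(z_a, z_b, r_a, r_b, q, x0, p0):
    """Fuse two noisy measurement streams of one scalar state with a random-walk Kalman filter.

    z_a, z_b: measurement sequences of equal length (np.nan marks a missing sample)
    r_a, r_b: measurement noise variances of the two sensors
    q: process noise variance per step (how fast the true state may drift)
    x0, p0: initial state estimate and its variance
    """
    x, p = x0, p0
    estimates = []
    for za, zb in zip(z_a, z_b):
        p = p + q                         # predict: random walk keeps x, inflates uncertainty
        for z, r in ((za, r_a), (zb, r_b)):
            if np.isnan(z):
                continue                  # sensor dropout: skip this update
            k = p / (p + r)               # Kalman gain
            x = x + k * (z - x)           # correct toward the measurement
            p = (1.0 - k) * p             # shrink uncertainty
        estimates.append(x)
    return np.array(estimates)


def rmse(estimate: np.ndarray, truth: np.ndarray) -> float:
    return float(np.sqrt(np.mean((estimate - truth) ** 2)))


rng = np.random.default_rng(1)
truth = 70.0 + np.cumsum(rng.normal(0.0, 0.2, size=300))   # slowly drifting heart rate (bpm)
ecg = truth + rng.normal(0.0, 1.0, size=300)               # assumed accurate sensor, variance 1
wrist = truth + rng.normal(0.0, 3.0, size=300)             # assumed noisier sensor, variance 9
wrist[100:150] = np.nan                                    # simulated dropout of the wrist sensor

fused = kalman_fuse(ecg, wrist, r_a=1.0, r_b=9.0, q=0.04, x0=ecg[0], p0=1.0)
print(f"RMSE ECG only: {rmse(ecg, truth):.2f}, fused: {rmse(fused, truth):.2f}")
```

The gain automatically weights each sensor by its noise variance, so the noisier stream contributes less, and a missing sample simply skips its update instead of corrupting the estimate.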

5.3. Embodied AI in large-scale virtual environments

Currently, most Embodied AI systems are limited to small indoor environments because of the substantial time and economic costs of scaling up to larger, more complex settings such as entire cities. Environmental digital twins (EDTs) are especially promising for extending AI applications beyond confined indoor environments. EDTs create digital representations of environments such as urban areas, providing a comprehensive and dynamic virtual model of complex settings, as shown in Figure 7.

Figure 7. Example of EDTs. DT technology in both urban and agricultural contexts offers significant advantages by enabling data-driven, real-time simulations. These applications not only optimize resource allocation but also reduce environmental risks, contributing to the sustainable development of cities and agricultural systems. EDTs: Environmental digital twins; DTs: digital twins.

Urban digital twins (UDTs) are increasingly used in smart city applications to create highly detailed virtual models of urban environments [135]. UDTs replicate various aspects of a city, such as infrastructure, traffic patterns, and environmental factors such as air quality and energy consumption. For Embodied AI, UDTs offer a controlled yet expansive environment for training AI systems designed for tasks such as autonomous driving, public safety monitoring, and traffic management [136]. By allowing Embodied agents to navigate and interact with complex city environments, UDTs help train Embodied AI models at scale so that they can operate effectively in real-world conditions. Moreover, training AI in virtual smart city environments can significantly reduce the costs and risks of real-world experimentation. Because these DTs can provide highly accurate simulations, AI can be trained to respond to traffic incidents, public emergencies, or urban planning scenarios without causing harm to the physical environment or human users [137].

DTs in agriculture are transforming the modern farming industry. They create virtual representations of agricultural fields, crops, and farming machinery [138]. For future Embodied AI systems involved in precision agriculture, such as autonomous tractors, drones, or robotic harvesters, DTs allow for safe and cost-effective training in large-scale farming environments. These virtual environments enable Embodied AI to learn tasks such as soil monitoring, crop management, and resource optimization without the risk of damaging valuable equipment or harming the environment. Using DTs reduces the need for expensive real-world trials, as Embodied AI systems can be extensively trained in virtual environments before being deployed in actual fields. In addition, DTs allow for continuous optimization by simulating a range of environmental conditions, such as varying weather patterns or soil types, helping AI adapt to a wide range of agricultural scenarios [40].
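Simulating a range of environmental conditions is commonly implemented as domain randomization: each training episode resets the virtual field with freshly sampled environment parameters, so the policy cannot overfit to a single setting. The sketch below shows only the sampling side under stated assumptions; the parameter names, ranges, and soil categories are illustrative, and the coupling to an actual simulator (e.g., Gazebo or Isaac Sim) is omitted.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FieldConditions:
    soil_type: str
    soil_moisture: float    # volumetric fraction
    rain_mm_per_h: float
    wind_m_per_s: float
    temperature_c: float


# Illustrative ranges only; a real agricultural DT would derive them from local field and weather data.
SOIL_TYPES = ("sand", "loam", "clay")
RANGES = {
    "soil_moisture": (0.05, 0.45),
    "rain_mm_per_h": (0.0, 20.0),
    "wind_m_per_s": (0.0, 15.0),
    "temperature_c": (-5.0, 40.0),
}


def sample_conditions(rng: random.Random) -> FieldConditions:
    """Draw one randomized set of environmental conditions for a training episode."""
    values = {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}
    return FieldConditions(soil_type=rng.choice(SOIL_TYPES), **values)


def episode_schedule(n_episodes: int, seed: int = 0) -> List[FieldConditions]:
    """Reproducible list of per-episode conditions (same seed -> same schedule)."""
    rng = random.Random(seed)
    return [sample_conditions(rng) for _ in range(n_episodes)]


schedule = episode_schedule(1000, seed=42)
print(schedule[0])
print("soil types covered:", sorted({c.soil_type for c in schedule}))
```

Seeding the sampler makes training runs reproducible, while the ranges can later be narrowed or reweighted toward conditions in which the agent performs poorly.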

6. CONCLUSIONS

This paper provides a comprehensive review of DTs and Embodied AI, including the foundational principles of DTs and Embodied AI, their ability to synchronize with physical systems in real time, and their applications in automation, HRC, and large-scale environments. In addition, this paper provides a novel perspective on the potential integration of DTs and Embodied AI in future developments. The integration of DTs and Embodied AI offers a transformative pathway for advancing the capabilities of AI across various domains. DTs provide highly accurate, real-time virtual replicas of physical systems, enabling AI to train, adapt, and perform in environments that closely mirror real-world conditions. This synergy significantly improves AI decision-making, perception, and adaptability, whether in human-centric applications, large-scale urban or agricultural environments, or real-time industrial settings. By leveraging the continuous synchronization between physical and virtual systems, DTs reduce the "sim-to-real gap" and offer more dynamic, interactive, and cost-effective solutions for AI development. The combination of DTs and Embodied AI paves the way for safer, more efficient, and scalable intelligent systems.

Future work in this field should consider the development of training and learning algorithms that use DTs for system and application optimization. While current DTs research mainly focuses on simulation, it often overlooks the potential of DTs to enhance system-level and application-specific performance. Realizing this potential requires integrating real-time, data-driven insights from DTs into learning processes. Another critical direction for future work is to address the limited data precision, real-time responsiveness, and high computational demands of DTs in large-scale applications. These challenges are often underexplored, yet they significantly affect the scalability and effectiveness of DTs in practical scenarios. Research should focus on techniques that enhance data fidelity, optimize computational efficiency, and improve the real-time capabilities of DTs, particularly in complex environments.

DECLARATIONS

Authors' contributions

Made substantial contributions to the research and investigation process, reviewed and summarized the literature, wrote and edited the original draft: Li, L.; Yang, S. X.

Made substantial contributions to review and summarize the literature: Li, L.

Performed oversight and leadership responsibility for the research activity planning and execution, developed ideas and provided critical review, commentary, and revision: Yang, S. X.

Availability of data and materials

Not applicable.

Financial support and sponsorship

This work was supported by the Natural Sciences and Engineering Research Council (NSERC) of Canada.

Conflicts of interest

Li, J. is a Junior Editorial Board Member of the journal Intelligence & Robotics, and Yang, S. X. is the Editor-in-Chief of the journal Intelligence & Robotics. Li, J. and Yang, S. X. were not involved in any steps of editorial processing, notably including reviewers' selection, manuscript handling and decision making.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2025.

REFERENCES

1. Gupta, A.; Savarese, S.; Ganguli, S.; Fei-Fei, L. Embodied intelligence via learning and evolution. Nat. Commun. 2021, 12, 5721.

2. Nygaard, T. F.; Martin, C. P.; Torresen, J.; Glette, K.; Howard, D. Real-world embodied AI through a morphologically adaptive quadruped robot. Nat. Mach. Intell. 2021, 3, 410-9.

3. Li, C.; Zhang, R.; Wong, J.; et al. BEHAVIOR-1K: a benchmark for embodied AI with 1,000 everyday activities and realistic simulation. In: Proceedings of The 6th Conference on Robot Learning, PMLR; 2023. pp. 80–93. https://proceedings.mlr.press/v205/li23a.html. (accessed 2025-02-28).

4. Driess, D.; Xia, F.; Sajjadi, M. S.; et al. PaLM-E: an embodied multimodal language model. arXiv 2023, arXiv:2303.03378. Available online: https://doi.org/10.48550/arXiv.2303.03378. (accessed 2025-02-28).

5. Duan, J.; Yu, S.; Tan, H. L.; Zhu, H.; Tan, C. A survey of embodied AI: from simulators to research tasks. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 230-44.