Digital twins as a unifying framework for surgical data science: the enabling role of geometric scene understanding

Abstract

Surgical data science is devoted to enhancing the quality, safety, and efficacy of interventional healthcare. While the use of powerful machine learning algorithms is becoming the standard approach for surgical data science, the underlying end-to-end task models directly infer high-level concepts (e.g., surgical phase or skill) from low-level observations (e.g., endoscopic video). This end-to-end nature of contemporary approaches makes the models vulnerable to non-causal relationships in the data and requires the re-development of all components if new surgical data science tasks are to be solved. The digital twin (DT) paradigm, an approach to building and maintaining computational representations of real-world scenarios, offers a framework for separating low-level processing from high-level inference. In surgical data science, the DT paradigm would allow for the development of generalist surgical data science approaches on top of the universal DT representation, deferring DT model building to low-level computer vision algorithms. In this latter effort of DT model creation, geometric scene understanding plays a central role in building and updating the digital model. In this work, we visit existing geometric representations, geometric scene understanding tasks, and successful applications for building primitive DT frameworks. Although the development of advanced methods is still hindered in surgical data science by the lack of annotations, the complexity and limited observability of the scene, emerging works on synthetic data generation, sim-to-real generalization, and foundation models offer new directions for overcoming these challenges and advancing the DT paradigm.

Keywords

INTRODUCTION

Surgical data science is an emerging interdisciplinary research domain that has the potential to transform the future of surgery. Capitalizing on the pre- and intraoperative surgical data, the research efforts in surgical data science are dedicated to enhancing the quality, safety, and efficacy of interventional healthcare[1]. With the advent of powerful machine learning algorithms for surgical image and video analysis, surgical data science has witnessed a significant thrust, enabling solutions for problems that were once considered exceptionally difficult. These advances include improvements in low-level vision tasks, such as surgical instrument segmentation or “critical view of safety” classification[2], to high-level and downstream challenges, such as intraoperative guidance[3-7], intelligent assistance systems[8-10], surgical phase recognition[11-18], gesture classification[14,19-21], and skills analysis[20,22,23]. While end-to-end deep learning models have been the backbone of recent advancements in surgical data science, the high-level surgical analysis derived from these models raises concerns about reliability due to the lack of interpretability and explainability. Alternate to these end-to-end approaches, the emerging digital twin (DT) paradigm, a virtual equivalent of the real world, allows interpretable high-level surgical scene analysis on enriched digital data generated from low-level tasks.

End-to-end deep learning models have been the standard approach to surgical data science in both low- and high-level tasks. These models either focus on specific tasks or are used as foundational models, solving multiple downstream tasks. This somewhat straightforward approach, inspired by deep learning best practices, has historically excelled in task-specific performances due to deep learning’s powerful representation learning capabilities. However, we argue that this approach - despite its recent successes - is ripe for innovation[1,24,25]. End-to-end deep learning models exhibit strong tendencies to learn, exploit, or give in to non-causal relationships, or shortcuts, in the data[26-28]. Because it is impossible at worst or very difficult at best to distinguish low-level vision from high-level surgical data science components in end-to-end deep neural networks, it generally remains unclear how reliable these solutions are under various domain shifts and whether they associate the correct input signals with the resulting prediction[29]. These uncertainties and unreliability hinder further development in the surgical data science domain and the clinical translation of the current achievements[1]. While explainable machine learning, among other techniques, seeks to develop methods that may assert adequate model behavior[30], by and large, this limitation poses a challenge that we believe is not easily remedied with explanation-like constructs of similarly end-to-end deep learning origin.

The DT paradigm offers an alternative to task-specific end-to-end machine learning-based approaches for current surgical data science research. It provides a clear framework to separate low-level processing from high-level analysis. As a virtual equivalent of the real environment (surgical field in surgical data science), the DT models the real-world dynamics and properties using the data obtained from sensor-rich environments through low-level processing[7,31-33]. The resulting DT is ready for high-level complex analysis since all relevant quantities are known precisely and in a computationally accessible form. Unlike end-to-end deep learning paradigms that rely on data fitting, the DT paradigm employs data to construct a DT model. While the low-level processing algorithms that enable the DT are not immune to non-causal learning and environmental influences during the machine learning process, which might compromise robustness or performance, their impact is mostly confined to the accuracy of digital model construction and update. The resulting digital model in the DT paradigm can provide not only visual guidance like mixed reality but also, more importantly, a platform for more comprehensive surgical data science research like data generation, high-level surgical analysis (e.g., surgical phase recognition, and skill assessment), and autonomous agent training. DT’s uniform representation of causal factors, including geometric and physical attributes of the subjects and tools, surgery-related prior knowledge, and user input, along with their clear causal relation with the surgical task, should ensure superior generalizability and interpretability of surgical data science research.

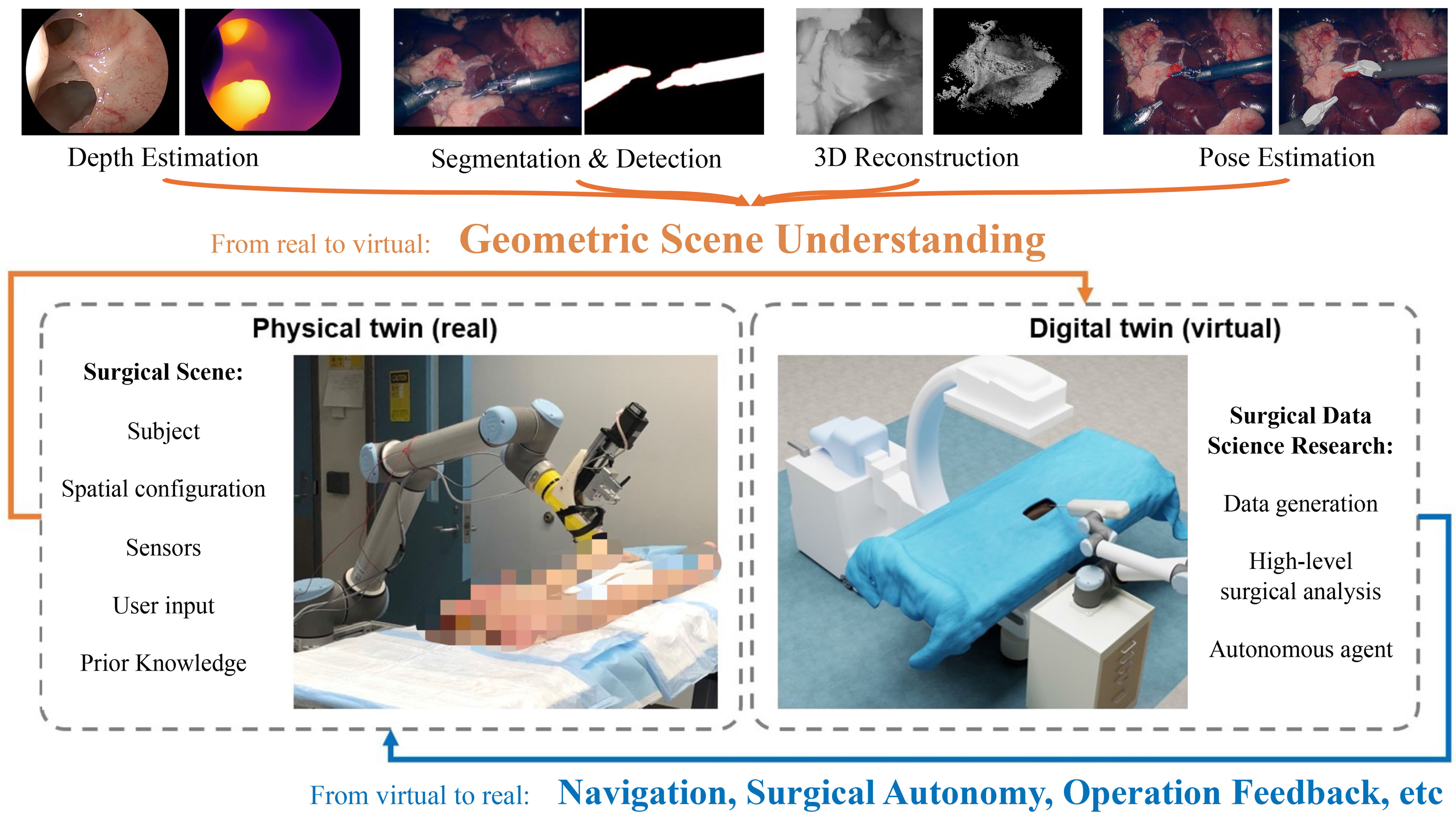

The fundamental component of the DT paradigm is the building and updating of the digital model from real-world observations. In this process, geometric information processing plays a central role in the representation, visualization, and interaction of the digital model. Thus, the geometric scene understanding (i.e., perceiving geometric information from the target scene) is vital to enabling the realization of the DT and further DT-based research in surgical data science. In this work, we focus on reviewing the geometric representations, geometric scene understanding techniques, and their successful application for building primitive DT frameworks. The design of geometric representations needs to consider the trade-off among accuracy, representation ability, complexity, interactivity, and interpretability, as they correspond to the accuracy, applicability, efficiency, interactivity, and reliability of the DT framework. The extraction and encoding of different target representations and their status in the digital world lead to the establishment and development of various geometric scene understanding tasks, including segmentation, detection, depth estimation, 3D reconstruction, and pose estimation [Figure 1]. Although various sensors in a surgical setup, such as optical trackers, depth sensors, and robotics meters, are important for geometric understanding and DT instantiation in some procedures[7], we focus on reviewing methods based on visible light imaging, as it is the primary real-time observation source in most surgeries, especially in minimally invasive surgeries (MIS) due to their limited operational space. We further select methods that achieve superior benchmark performance for geometric scene understanding, which can be applied for accurate DT construction and updating. The integration of geometric scene understanding within the DT framework has led to successful applications, including simulator-empowered DT models and procedure-specific DT models.

Figure 1. The enabling role of geometric scene understanding for digital twin. All figures in the article are generated solely from the authors’ own resources, without any external references.

The paper is organized as follows: Section “GEOMETRIC REPRESENTATIONS” provides an overview of the existing digital representations for geometric understanding. Section “GEOMETRIC SCENE UNDERSTANDING TASKS” investigates existing datasets and various algorithms used to extract geometric understanding, assessing their effectiveness and limitations in terms of benchmark performance. Section “APPLICATIONS OF GEOMETRIC SCENE UNDERSTANDING EMPOWERED DIGITAL TWINS” explores the successful attempts to apply geometric scene understanding techniques in DT. Concluding the paper, Section “DISCUSSION” offers an in-depth discussion on the present landscape, challenges, and future directions in the field of geometric understanding within surgical data science.

GEOMETRIC REPRESENTATIONS

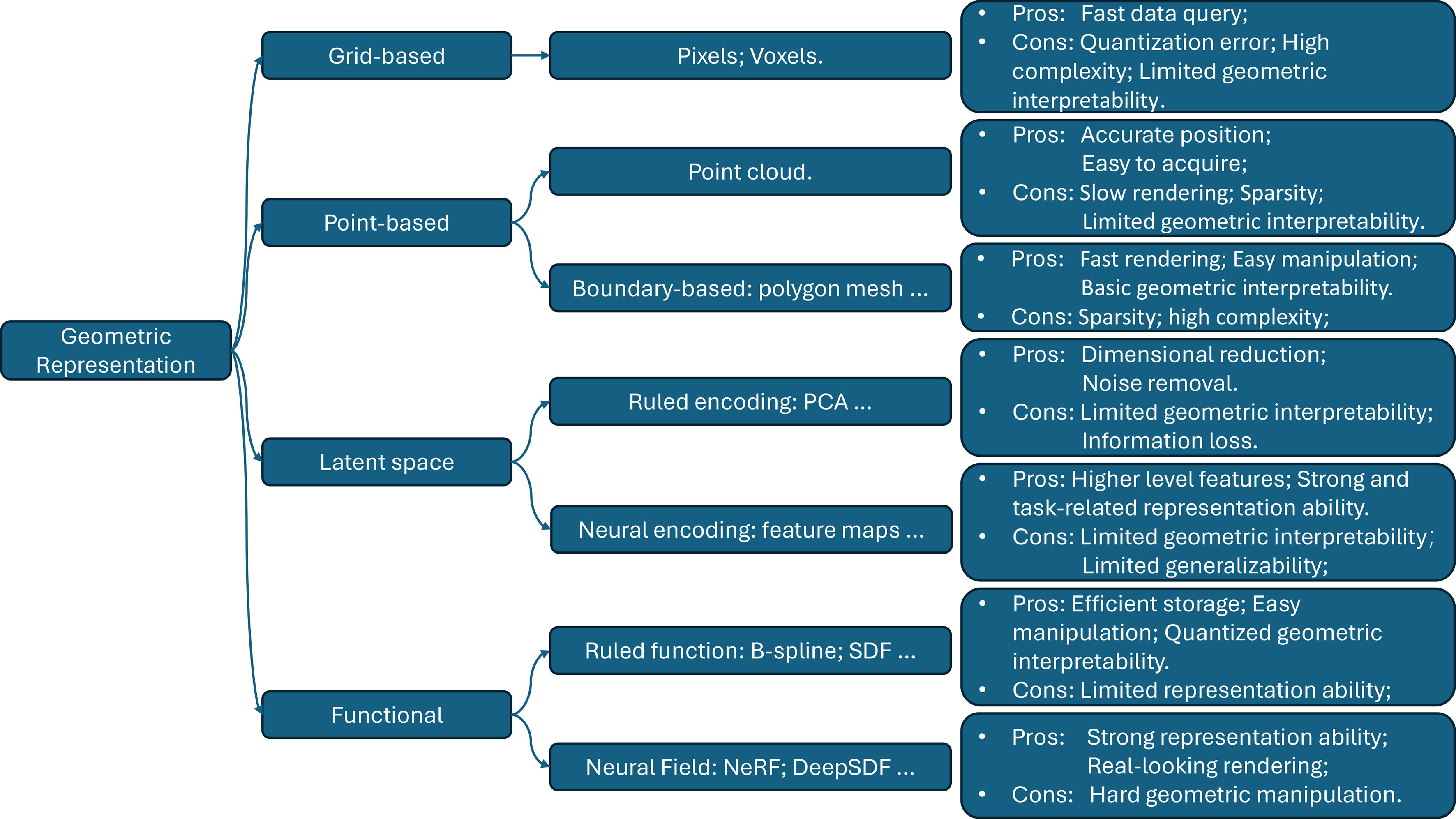

This section introduces various geometric representation categories and analyzes their advantages and disadvantages in relation to DT requirements. The taxonomy, along with some examples and summarized takeaways, is shown in Figure 2. We first present direct grid-based and point-based representations. Then, we discuss latent space representation generated via ruled encoding or neural encoding on previous representations or other modalities. Finally, we cover functional representations, which are generated through meticulous mathematical derivation or estimation based on observation.

Figure 2. An overview of geometric representations. PCA: Principal component analysis; SDF: signed distance function.

Grid-based representation

The grid-based representation divides the 2D/3D space into discrete cells and usually stores it as multidimensional arrays. Each cell holds values for various attributes, including density, color, semantic classes, and others. For example, the segmentation masks represent shapes of interest in rectangular space uniformly divided into a 2D grid of cells, where each cell is called a pixel. Some 3D shapes are also stored in uniformly divided 3D grids of cells. These cells are called voxels and usually hold the density/occupancy value. While these simplified representations enable efficient data queries for specific locations, they come with a trade-off between the error introduced by quantization and the computation and memory complexity[34]. The high computation and storage complexity hinders scaling up the accuracy and range of the representation. More importantly, there is an obvious gap between discrete representations and humans’ intuition of expressing geometry from a semantic level[34]. Humans perceive geometry in its entirety or connection between parts, whereas grid-based discrete representation represents geometry mostly by the spatial aggregation of the cells without explicit relations among them. Grid-based representations are suited well for tasks that focus on individual cell values/patterns in a group of cells. However, the lack of explicit relations among cells limits their geometric interpretability and their use in high-level processing tasks such as rigid/deformable surface representation and reconstruction.

Point-based representation

Instead of uniformly sampling the space, point-based representation samples key points represented in the Cartesian coordinate system.

Point cloud

A point cloud is a discrete set of data points in the 3D space[35]. It is the most basic point-based representation that can accurately represent the absolute position in an infinite space for each point. However, the sparsity of the points limits the accuracy of the representation at the object level. While it is easy to acquire from sensors, the lack of explicit relationships between points makes it slow to render. Similar to grid-based representation, the geometric interpretability of point clouds is limited due to the lack of relationships between points.

Boundaries

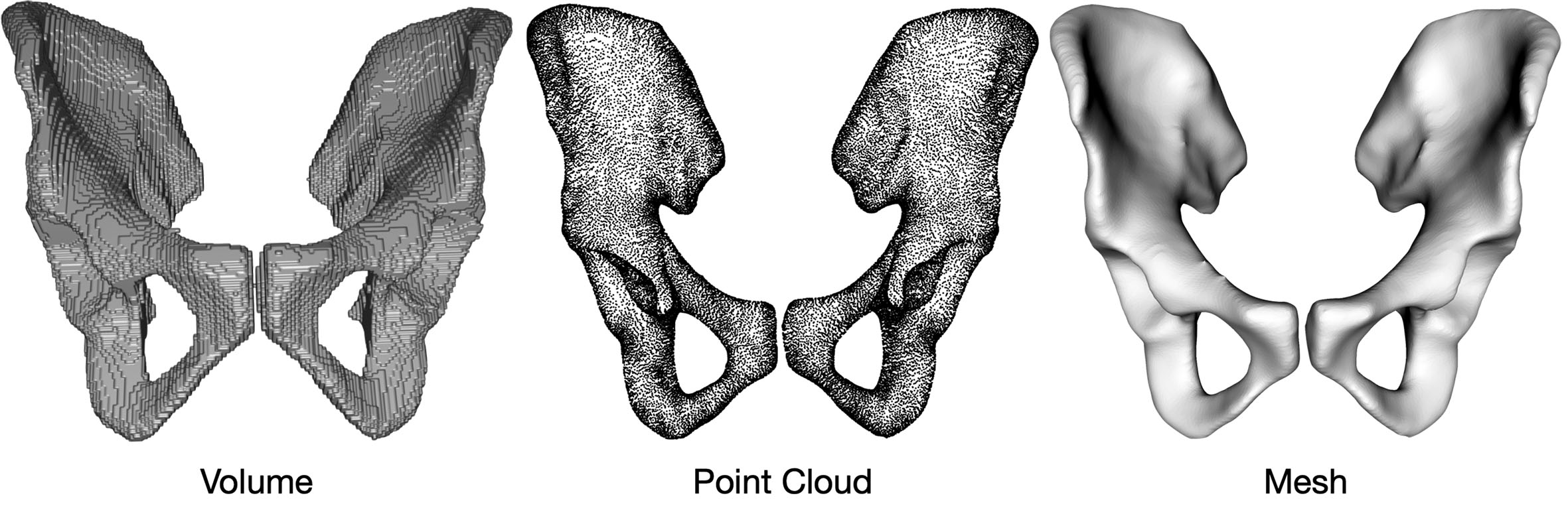

Boundaries are an alternate geometric representation method that introduces explicit relationships between points. An example in 3D space is the polygon mesh[36]. It is a collection of vertices, edges, and faces that define the shape of a polyhedral object. This type of representation is usually stored and processed as a graph structure. Boundary-based representations offer the flexibility to represent complex shapes and are widely used for 3D modeling. Compared to the point cloud, boundary-based representation offers faster rendering speed and more control over appearance[36]. A visual comparison is provided in Figure 3. However, it shares the same limitation as point clouds due to the sparsity of the points. Additionally, boundary-based representations have higher complexity and memory usage, as the potential connections increase quadratically as a function of the number of points. For high-level processing, the relation among points and explicit boundary representation provides basic geometric interpretability.

Latent space representation

Latent space representation is encoded from the original representation, such as point clouds, 2D images, or 3D volume, for dimension reduction or feature processing and aggregation. We divide the latent space representation into two subcategories based on the encoding methods.

Ruled encoding

Ruled encoding employs handcrafted procedures to encode the data to the latent space. One example is the principal component analysis (PCA)[37]. PCA linearly encodes the data to new coordinates according to the deviation of all samples. For geometric understanding, PCA can be used to extract the principal component as a model template for a specific object, e.g., face[38,39], and express the object as a linear combination of these templates. The latent representation reduces feature dimensionality and also removes noise[40]. However, the encoding is usually hard to interpret in geometric aspects and the projection and dimensional reduction usually come with information loss.

Neural encoding

Neural encoding extracts features via neural network architectures. The encoding is learned from specific data distributions with specific geometric signals or geometry-related tasks. Neural networks’ ability to extract higher-level features enhances the representation ability[41]. This makes neural encoding-based latent space representation work well when the downstream tasks are highly correlated to the pre-training proxy tasks and the application domain is similar to the training domain. However, when these conditions are not satisfied, the poor generalizability of neural networks[42] makes this type of representation less informative for downstream tasks. This limitation is also difficult to overcome due to the lack of geometric interpretability.

Functional representation

Functional representation captures geometric information using geometric constraints typically expressed as functions of the coordinates, specifying continuous sets of points/regions. We divide the functional representation into two subcategories based on the formation of the function.

Ruled functions

Ruled functions can be expressed in parametric ways or implicit ways. Parametric functions explicitly represent the point coordinates as a function of variables. Implicit functions take the point coordinates as input[43]. A signed distance function (SDF) f of spatial points x maps x to its orthogonal distance to the boundary of the represented object, where the sign is determined by whether or not x is in the interior of the object. The surface of the object is implicitly represented by the set {x | f(x) = 0}. Level sets represent geometric understanding by a function of coordinates. The value of the function is expressed as a level. The set of points that generate the same output value is a level set. A level set can be used to represent curves or surfaces. For primitive shapes like ellipses or rectangles, the ruled functional representation provides perfect accuracy, efficient storage, predictable manipulation, and quantized interpretability for some geometric properties. Thus, this representation form is commonly used in human-designed objects and simulations. However, for natural objects, although effort has been made to represent curves and surfaces using polynomials, as shown in the development of the Bezier/Berstein and B-spline theory[44], accurate parametric representations are still challenging to design.

Neural fields

Neural fields use the neural network’s universal approximation property[45] to approximate traditional functions to represent geometric information. For example, neural versions of level-set[46] and SDF[47] are proposed. Neural fields map the spatial points (and orientation) to specific attributes like colors (radiance field)[48] and signed orthogonal distances to the surface (SDF)[49]. Unlike latent feature representation, where the intermediate feature map extracted by the neural networks represents the geometric information, the Neural fields encode the geometric information into the networks’ parameters. Compared to the traditional parametric representation, the universal approximation ability[45] of neural networks enables the representation of complex geometric shapes and discontinuities learned from observations. The interpretability of the geometric information from the neural function depends on the function it approximates. For example, NeRF[48] lacks geometric interpretability as it models occupancy, whereas Neuralangelo[49] offers better geometric interpretability as it models SDF. However, since the network is a black box, geometric manipulation is not as direct as in ruled functional representations.

GEOMETRIC SCENE UNDERSTANDING TASKS

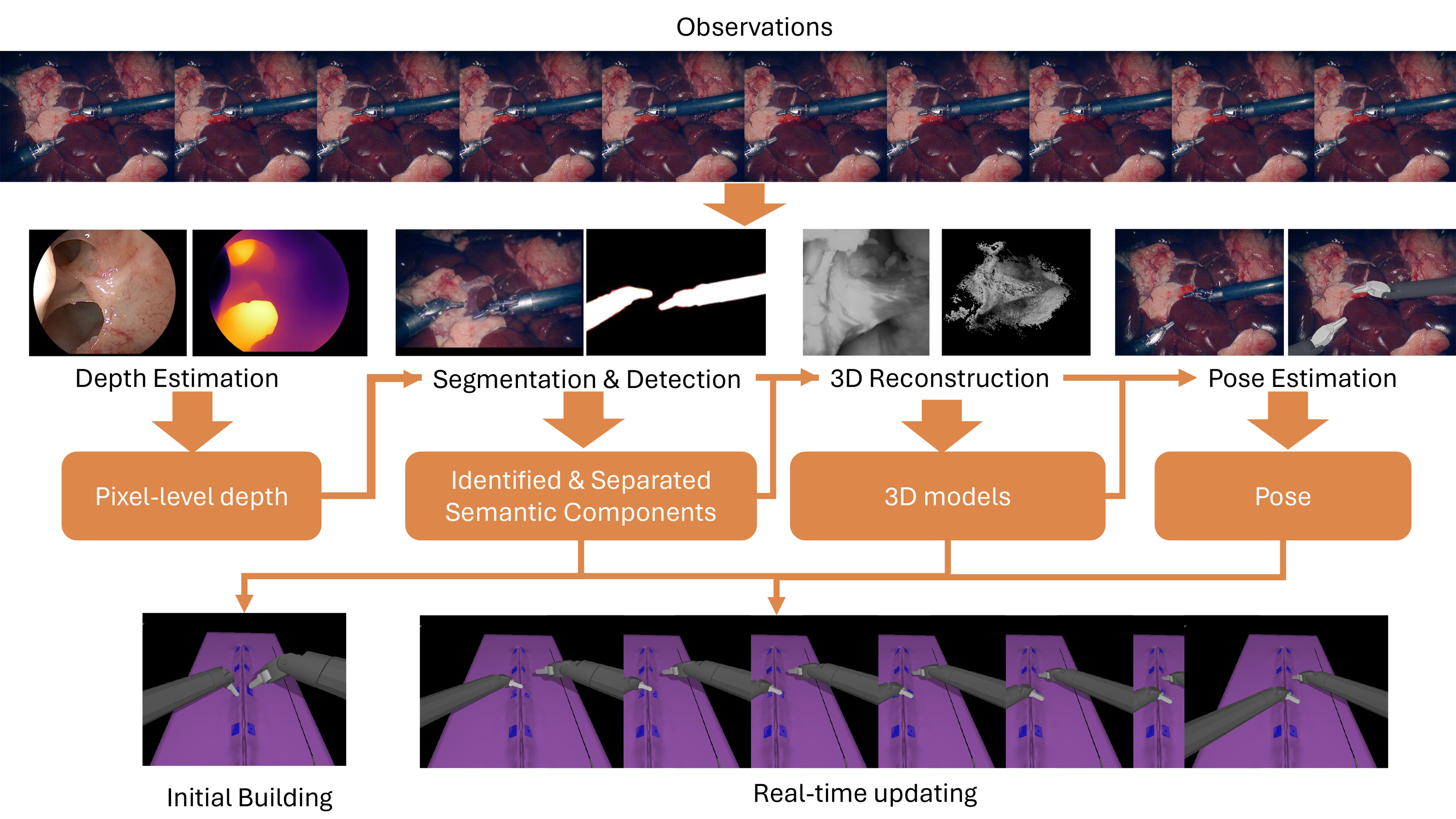

In this section, we visit and analyze some existing geometric scene understanding tasks and corresponding datasets and methods that are the building blocks of the DT framework. The illustration of tasks’ functionality and relative relation in the building and updating of DT is shown in Figure 4. We mainly introduce four large categories - segmentation and detection, depth estimation, 3D reconstruction, and pose estimation. Segmentation and detection in both static images and videos focus on identifying and isolating the target and generating decomposed semantic components from the entire scene. This procedure is the prerequisite for building and consistently updating the DT. While depth estimation extracts pixel-level depth information of the entire scene from static images, the generated depth map is limited in accuracy and representation ability due to its grid-based representation. Thus, it is not suitable as the only source for building and updating the digital twin and is often employed together with segmentation/detection methods and 3D reconstruction methods. Based on the identified targets, 3D reconstruction methods take multiple observations and extract more accurate and detailed geometric information to form 3D models for all components. Pose estimation tasks align the existing 3D models and their identification with the observations. While all techniques significantly contribute to building and updating the digital model, the building of the initial digital model mostly relies on segmentation, detection, and 3D reconstruction, adopting a “identify then reconstruct” principle. Once the digital model is initialized, the real-time updating of semantic components in the digital scene relies more on pose estimation. We first visit and summarize the availability of related data and materials in one subsection and then visit and analyze the techniques in the following subsections in the order of segmentation and detection, depth estimation, 3D reconstruction, and pose estimation.

Figure 4. An overview of geometric scene understanding tasks and corresponding impact in the building and updating of DT. All figures in the article are generated solely from the authors’ own resources, without any external references. DT: Digital twin.

Availability of data and materials

Segmentation and detection

Segmentation and detection, as fundamental tasks in surgical data science, receive an enormous amount of attention from the community. Challenges are being proposed with the corresponding dataset after the success of EndoVis challenges[50,51]. EndoVis[50,51] collected data from abdominal porcine procedures with the da Vinci surgical robot and manually annotated the surgical instruments and some tissue anatomy for segmentation. SAR-RARP50[52] and CATARACTS[53] challenge released in-vivo datasets for semantic segmentation in real procedures. SurgT[54] and STIR[55] provide annotations for tissue tracking. RobustMIS challenge[56] divided test data into three categories - same procedures as training, same surgery but different procedures, and different but similar surgery. With the three levels of similarity, the challenge aimed to assess algorithms’ robustness against domain shift. SegSTRONG-C[57] collected ex vivo data with manually added noise like smoke, blood, and low brightness to assess models’ robustness against non-adversarial corruptions unseen from the training data. Besides challenges, researchers also collect data to support algorithm development[58] and publications[59-61].

Although various datasets are available for segmentation and detection, due to the complexity of the segmentation and detection annotation, the scales (< 10k) of those datasets are not as large as the general vision data (MS COCO[62] 328k images, Object365[63], 600k images). Thus, SurgToolLoc[64] challenge provided tool presence annotation as weak labels in 24,695 video clips for machine learning models to be trained to detect and localize tools in video frames with bounding boxes. Lap. I2I Translation[65] attempted to generate a larger-scale dataset using an image-to-image translation approach from synthetic data. SegSTRONG-C[57] provided a foundation model-assisted[66] annotation protocol to expedite the annotation process. Despite the effort, the demand for a large-scale and uniform dataset exists desperately.

Depth estimation

Because of the difficulty in obtaining reliable ground truth, there are comparatively fewer surgical video datasets available for dense depth estimation. The EndoSLAM dataset consists of ex vivo and synthetic endoscopic video, with depth and camera pose information provided for each sample[67]. As a part of the Endoscopic Vision Challenge, the SCARED dataset includes porcine endoscopic video with ground truth obtained via structured light projection[68]. The Hamlyn Center dataset consists of in vivo laparoscopic and endoscopic videos, which are annotated in refs[69,70] with dense depth estimation using a pseudo-labeling strategy, resulting in the rectified Endo-Depth-and-Motion dataset (referred to as “Rectified Hamlyn”). Other smaller-scale datasets include the JHU Nasal Cavity dataset originally used for self-supervised monocular depth estimation[41], the Arthronet dataset[71], and two colonoscopy datasets with associated ground truth[72,73].

3D reconstruction

3D reconstruction relies on the correspondence among the observations of the same object/scene. Thus, unlike the datasets of other tasks where the image frames are always paired with ground truth annotations or weak annotations, any surgical video with adequate views of the target scene/objects can be used for 3D reconstruction. However, due to the limited observability in the surgical scenario, datasets with depth annotation[41,67,69,70,73] mentioned above or datasets containing stereo videos[57,74,75] are preferred for the 3D reconstruction research to overcome the ambiguity. Some datasets[67,73] also contain the ground-truth 3D model for the target anatomy for quantitative evaluation. Besides datasets already introduced in previous tasks, the JIGSAW[75] dataset, originally collected for surgical skill assessment, can be used for the reconstruction of surgical training scenarios.

Pose estimation

Pose estimation in laparoscopic surgery is important for accurate tool tracking and manipulation. SurgRIPE[76], part of the Endoscopic Vision Challenge 2023, addresses marker-less 6DoF pose estimation for surgical instruments under and without occlusion. The Laparoscopic Non-Robotic Dataset[77] focuses on simultaneous tool detection, segmentation, and geometric primitive extraction in laparoscopic non-robotic surgery. It includes tool presence, segmentation masks, and geometric primitives, supporting comprehensive tool detection and pose estimation tasks. Datasets capturing surgical tools in realistic conditions are essential for accurate pose estimation. The 3D Surgical Tools (3dStool) dataset[78] was constructed to include RGB images of surgical tools in action alongside their 3D poses. Four surgical tools were chosen: a scalpel, scissors, forceps, and an electric burr. The tools were recorded operating on a cadaveric knee to accurately mimic real-life conditions. The dVPose dataset[79] offers a realistic multi-modality dataset intended for the development and evaluation of real-time single-shot deep-learning-based 6D pose estimation algorithms on a head-mounted display. It includes comprehensive data for vision-based 6D pose estimation, featuring synchronized images from the RGB, depth, and grayscale cameras of the HoloLens 2 device, and captures the extra-corporeal portions of the instruments and endoscope of a da Vinci surgical robot.

Simulated and synthetic datasets can provide large-scale data and annotations through controlled environments. The Edinburgh Simulated Surgical Tools Dataset[80] includes RGBD images of five simulated surgical tools (two scalpels, two clamps, and one tweezer), featuring both synthetic and real images. Synthetic Surgical Needle 6DoF Pose Datasets[81] were generated with the AMBF simulator and assets from the Surgical Robotics Challenge[82]. These synthetic datasets focus on the 6DoF pose estimation of surgical needles, providing a controlled environment for developing and testing pose estimation algorithms. The POV-Surgery dataset[83] offers a large-scale, synthetic, egocentric collection focusing on pose estimation for hands with different surgical gloves and three orthopedic instruments: scalpel, friem, and diskplacer. It consists of high-resolution RGB-D video streams, activity annotations, accurate 3D and 2D annotations for hand-object poses, and 2D hand-object segmentation masks.

Pose estimation in surgical settings also extends to the use of X-ray imaging. i3PosNet[84] introduced three datasets: two synthetic Digitally Rendered Radiograph (DRR) datasets (one with a screw and the other with two surgical instruments) and a real X-ray dataset with manually labeled screws. These datasets facilitate the study and development of pose estimation algorithms using X-ray images in temporal bone surgery.

Table 1 summarizes the datasets mentioned in this section.

Summarization of datasets

| Category | Dataset |

| Segmentation and detection | EndoVis[50,51], Lap. I2I Translation[65], Sinus-Surgery-C/L[58], CholecSeg8k[59], CaDIS[53], RobustMIS[56], Kvasir-Instrument[60], AutoLaparo[61], SurgToolLoc[64], SAR-RARP50[52], SurT[54], STIR[55], SegSTRONG-C[57] |

| Depth estimation | EndoSLAM[67], SCARED[68], Rectified Hamlyn[70], JHU Nasal Cavity[41], Arthronet[71], AIST Colonoscopy[72], JHU Colonoscopy[73] |

| 3D reconstruction | JIGSAW[75], Lin et al.[74], Hamlyn[69], Rectified Hamlyn[70], EndoSLAM[67], JHU Nasal Cavity[41], JHU Colonoscopy[73] |

| Pose estimation | SurgRIPE[76], Laparoscopic Non-robotic Dataset[77], 3dStool[78], dVPose[79], Edinburgh Simulated Surgical Tools Dataset[80], Synthetic Surgical Needle 6DoF Pose Datasets[81], POV-Surgery[83], i3PosNet[84] |

Segmentation and detection

Segmentation and detection aim to identify the target objects from a static observation and represent them as space occupancy in pixel/voxel level labels or simplified bounding boxes for target instances, respectively. Segmentation and detection methods play a foundational role in performing geometric understanding for a complex scenario like surgery and provide basic geometric information by indicating which areas are occupied by objects. In this section, we focus on the segmentation and detection of videos and images. This section is divided into frame-wise and video-wise segmentation and detection, namely, (a) single frame segmentation and detection and (b) video object segmentation and tracking.

Single frame segmentation and detection

Starting from easier goals with the availability of more accurate and larger-scaled annotation, segmentation and detection in 2D space receive more attention. Due to different demands, the development of the detection and segmentation methods started from different perspectives. Traditional segmentation methods rely on low-level features such as edges and region colors, discriminating regions using thresholding or clustering techniques[85-87]. Traditional detection methods rely on human-crafted features and predict based on template matching[88-90]. With the emergence of deep learning algorithms, both detection and segmentation methods started using convolutional neural networks (CNNs) and adopted an end-to-end training regime.

In the general vision domain, segmentation was initially explored as a semantic segmentation task in the 2D space. As the target labels were generated from a fixed number of candidate classes, the output could be represented in the same format of multidimensional arrays as the input, making the encoder-decoder architecture-based FCN[91] a perfect fit for the task. Subsequent works[92-95] improved the performance by improving the feature aggregation techniques within the encoder-decoder architecture.

Object detection methods, unlike semantic segmentation, require the generation of rectangular bounding boxes for an arbitrary number of detected objects, in the format of a vector consisting of continuous center location and size that represents the spatial extent. The output representation that encompasses an arbitrary number of detected objects distinguishes instances from each other. As a consequence, the simple encoder-decoder architecture no longer meets the requirement. Instead, the detection pipeline based on region proposals represented by R-CNN[96-98] series and YOLO[99]/SSD[100] leads to the rapid emergence and development of two different paradigms of CNN-based object detection methods: two-stage paradigm[101-103] and one-stage paradigm[104-108].

The development paths for detection and segmentation converged with the need for instance-level segmentation. Instance segmentation requires a method to not only generate class labels for pixels but also distinguish instances from each other. Instance segmentation methods[109-112] that adopt a two-stage paradigm follow the “detect then segment” pipeline led by Mask R-CNN[109]. Single-stage paradigm methods[113-116] are free from generating bounding boxes first. The EndoVis instrument segmentation challenge and scene segmentation challenge[50,51] provide surgical-specific benchmarks and methods[117-122] targeting surgical video/images are proposed. These methods adopt architectures from the general computer vision domain, and some explore feature fusion mechanisms and data-inspired training techniques to better suit the training on surgical data. Meanwhile, due to the high-stakes nature of surgical procedures, the robustness of the segmentation and detection method is also an aspect that receives attention[2,56,123-125].

With the rise of vision transformers (ViT), ViT-based methods for segmentation and detection have received more attention. While numerous task-specific architectures have been introduced for semantic segmentation[126,127] and object detection[128-132], universal architectures/methods have also been explored[133,134]. Transformers architecture’s strong ability to deal with large-scale vision and language data and the effort of collecting immense amounts of data from industry lead to the rise of foundation models. CLIP[135] ignited the development of vision-language models[136-138] for segmentation and detection,

There are also methods designed for 3D segmentation or detection[148,149]. However, either their input representation is not visible light imaging like point cloud[150] or image including depth[151], or they require a 3D reconstruction from monocular[152] or multi-view 2D images[153] as a prerequisite for success. These are all beyond the scope of this paper or section.

Video object segmentation and tracking

With the introduction of strong computation resources and large-scale video-based annotation, the success of image-based segmentation and detection has led to the rise of video object segmentation and tracking. Video object segmentation and tracking aim to segment or track the objects with initial segmentation or detection results across the video sequence while maintaining their identities. The initial status can be given by an image-based segmentation or detection algorithm, or through manual annotation. Providing geometric understanding similar to that of instance-based segmentation and detection methods. Video object segmentation and tracking are vital for updating geometric understanding in a dynamic scene.

The development of video object segmentation algorithms mainly focuses on exploring the extraction and aggregation of spatial and temporal features for segmentation propagation and refinement. Temporal features are first explored with test-time online fine-tuning techniques[154,155] and recurrent approaches[156,157]. However, tracking under occlusions is challenging without spatial context. To address this, space-time memory[158-160] incorporates both spatial and temporal context with a running memory maintained for information aggregation. Exploiting the transformer architecture’s robust ability to deal with sequential data, such as videos, video ViT-based methods[161,162] have also showcased favorable results. Cutie[163] incorporates memory mechanism, transformer architecture, and feature fusion, achieving state-of-the-art results in video object segmentation.

The progression of video object tracking has witnessed the emergence of various task variants, such as video object detection[164-167], single object tracking[168-170], and multi-object tracking[171-173]. Traditional algorithms rely on sophisticated pipelines with handcrafted feature descriptors and optical flow for appearance modeling, smoothness assumptions-based motion modeling, object interaction, and occlusion handling, probabilistic inference, and deterministic optimization[174]. Applying neural networks simplifies the pipeline. One aspect of the effort focuses on modeling temporal feature flow or temporal feature aggregations[164-170] for better feature-level correspondence between frames. The other aspect focuses on improving object-level correspondence in multi-object scenarios[171-173].

Video object segmentation and tracking[175] in surgical videos mainly focus on moving objects like surgeons’ hands and surgical instruments. However, work focusing on tracking the tissues is still necessary when there is frequent camera movement. Taking advantage of a limited and sometimes fixed number of objects to track and similar appearance during the surgery, some traditional methods assume models of the targets[176-178], optimize with similarity functions or energy functions with respect to the observations and some low-level features, and apply registration or template matching for prediction. Some[179,180] rely on additional markers and other sensors for more accurate tracking.

Deep learning methods became the dominant approach once they were applied to surgical videos. Since less intra-class occlusion exists in the surgical scene, there is less demand for that sophisticated feature fusion mechanism in general vision. Most works continue using less data-consuming image-based segmentation and detection architectures[181-185] and maintain the correspondence at the result level. There are also works for end-to-end sequence models and spatial-temporal feature aggregation attempts[186,187].

Depth estimation

The goal of depth estimation is to associate with each pixel a value that reflects the distance from the camera to the object in that pixel, for a single timestep. This depth may be expressed in absolute units, such as meters, or in dimensionless units that capture the relative depth of objects in an image. The latter goal often arises for monocular depth estimation (MDE), which is depth estimation using a single image, due to the fundamental ambiguity between the scale of an object and its distance from the camera. Conventional approaches use shadows[188], edges, or structured light[189,190], to estimate relative depth. Although prior knowledge about the scale of specific objects in a scene enables absolute measurement, it was not until the advent of deep neural networks that object recognition became computationally tractable[191]. Stereo depth estimation (SDE), on the other hand, leverages the known geometry of multiple cameras to estimate depth in absolute units, and it has long been studied as a fundamental problem relevant to robotic navigation, manipulation, 3D modeling, surveying, and augmented reality applications[192,193]. Traditional approaches in this area used patch-based statistics[194] or edge detectors to identify sparse point correspondences between images, from which a dense disparity map can be interpolated[193,195]. Alternatively, dense correspondences can be established directly using local window-based techniques[196,197] or global optimization algorithms[198,199], which often make assumptions about surfaces being imaged to constrain the energy function[193]. However, the advent of deep learning revolutionized approaches to both monocular and stereo-depth estimation, proving particularly advantageous for the challenging domain of surgical images.

Within that domain, depth estimation is a valuable step toward geometric understanding in real time. As opposed to 3D reconstruction or structure-from-motion algorithms, as described below, depth estimation requires only a single frame of monocular or stereo video, meaning this geometric snapshot is just as reliable as post-hoc analysis that leverages future frames as well as past ones. Furthermore, it makes no assumptions about the geometric consistency of the anatomy throughout the surgery. In combination with detection and recognition algorithms, such as those above, depth estimation provides a semantically meaningful, 3D representation of the geometry of a surgical procedure, particularly minimally invasive procedures that rely on visual guidance. Traditionally, laparoscopic, endoscopic, and other visible spectrum image-guided procedures relied on monocular vision systems, which were sufficient when provided as raw guidance on a flat monitor[200,201]. The introduction of 3D monitors, which display stereo images using glasses to separate the left- and right-eye images, enabled stereo endoscopes and laparoscopes to be used clinically[202], proving to be invaluable to clinicians for navigating through natural orifices or narrow incisions[200,203]. Robot-assisted surgical robots like the da Vinci surgical system likewise use a 3D display in the surgeon console to provide a sense of depth. The increasing prevalence, therefore, of stereo camera systems in surgery has motivated the development of depth estimation algorithms for both modalities in this challenging domain.

Surgical video poses challenges for MDE in particular because it features deformable surfaces under variable lighting conditions, specular reflectances, and predominant motion along the non-ideal camera axis[204,205]. Deep neural networks (DNNs) offer a promising pathway for addressing these challenges by learning to regress dense depth maps consistently based on a priori knowledge of the target domain in the training set[206]. In this context, obtaining reliable ground truth is of the highest importance, and in general, this is obtained using non-monocular methods, while the DNN is restricted to analyzing the monocular image. Visentini-Scarzanella et al.[207] and Oda et al.[208], for example, obtained depth maps for colonoscopic video by rendering depth maps based on a reconstruction of the colon from CT. A more scalable approach uses the depth estimate from stereo laparoscopic[209] or arthroscopic[210] video, but this still requires stereo video data, which may not be available for procedures typically performed with monocular scopes. Using synthetic data rendered from photorealistic virtual models is a highly scalable method for generating images and depth maps, but DNNs must then overcome the sim-to-real gap[211-215]. Refs[204,216] explore the idea of self-supervised MDE by reconstructing the anatomical surface with structure-from-motion techniques but restricting the DNN to a single frame, later used widely[217,218]. Incorporating temporal consistency from recent frames can yield further improvements[219,220]. Datasets such as EndoSLAM[67] for endoscopy, SCARED[68] for laparoscopy, ref[71] for arthroscopy, and ref[221] for colonoscopy use these methods to make ground truth data more widely available for training[218,222-224]. For many of these methods, a straightforward DNN architecture with an encoder-decoder structure and straightforward loss function was used[204,211,212,216], although geometric constraints such as edge[208,225] or surface[223] consistency may be incorporated into the loss function. More drastic innovations explicitly confront the unique challenges of surgical videos, such as artificially removing smoke, which is frequently present due to cautery tools, using a combined GAN and U-Net architecture that simultaneously estimates depth[226].

As in detection and recognition tasks, large-scale foundation models have been developed with MDE in mind. The Depth-Anything model leverages both labeled (1.5M) and unlabeled (62M) images from real-world datasets to massively scale up the data available for training DNNs[227]. Rather than using structure-from-motion or other non-monocular methods to obtain ground truth for unlabeled data, Depth-Anything uses a previously obtained MDE teacher model to generate pseudo-labels for unlabeled images, preserving semantic features between the student and teacher models. Although trained using real-world images, Depth Anything’s zero-shot performance on endoscopic and laparoscopic video is nevertheless comparable to specialized models in terms of speed and performance[228]. It remains to be seen whether foundational models trained on real-world images will yield substantial benefits for surgical videos after fine-tuning or other transfer learning methods are explored.

For stereo depth estimation, DNNs have likewise shown vast improvements over conventional approaches. The ability of CNNs to extract salient local features has proved efficacious for establishing point correspondences based on image appearance, compared to handcrafted local or multi-scale window operators[229,230], leading to similar approaches on stereo laparoscopic video[231,232]. With regard to dense SDE, however, the ability of vision transformers[233] to train attention mechanisms on sequential information has proved especially apt for overcoming the challenges of generating globally consistent disparity maps, especially over smooth, deformable, or highly specular surfaces often encountered in surgical video[234-237]. When combined with object recognition and segmentation networks, as described above, they can be used to contend with significant occlusions such as those persistent in laparoscopic video from robotic-assisted minimally invasive surgery (RAMIS)[236].

3D reconstruction

Going a step beyond recognition, segmentation, and depth estimation, 3D reconstruction aims to generate explicit geometric information about a scene. In contrast to the depth estimation explained above, where the distance between the object and camera is represented as a distance value in a pixel, 3D reconstructed scenes are represented either using discrete representation (point cloud or mesh grid) or continuous representation (Neural Fields). In the visible light domain, 3D reconstruction refers to the intraoperative 3D reconstruction of surgical scenes, including anatomical tissues and surgical instruments. While it has been traditionally employed to reconstruct static tissues and organs, recently, novel techniques have been introduced for the 3D reconstruction of deformable tissues and to update preoperative 3D models based on intraoperative anatomical changes. Since most of the preoperative imaging modalities, such as CT and MRI, are 3D, inter-operative 3D reconstructive enables 3D-3D registration[41,210,238-241]. This makes real-time visible light imaging-based 3D reconstruction a key geometric understanding task that can aid surgical navigation, surgeon-centered augmented reality, and virtual reality[236].

3D reconstruction methods often use multiple images, acquired either altogether or at various times, to reconstruct a 3D model of the scene. Conventional reconstruction methods that estimate 3D structures from multiple 2D images include Structure from Motion (SfM)[242] and Simultaneous Localization and Mapping (SLAM)[243-247]. Similar to stereo depth estimation techniques, these methods fundamentally rely on motion parallax, the difference in object visualization from different image/camera viewpoints, for accurate estimation of the 3D structure of the object. One of the necessary tasks in estimating structure from motion is finding the correspondence between the different 2D images. Geometric information processing plays a key role in detecting and tracking features to establish correspondence. Such feature detection techniques include scale-invariant feature transform (SIFT) and Speeded-Up Robust Features (SURF). Alternatively, as visible light imaging-based surgical procedures are often equipped with stereo cameras, the use of depth estimation for the reconstruction of surgical scenes has also been reported[248]. Utilizing the camera pose information, the SLAM-based method allows the surgical scene reconstruction by fusing the depth information in the 3D space[244-246]. Although SfM and SLAM have shown promising performance in the natural computer vision domain, their application in the surgical domain has been limited, in part due to the paucity of features in the limited field of view. Additionally, these techniques assume the object to be static and rigid, which is not ideal for surgical scenes as the tissues/organs undergo deformations. The low-light imaging conditions, presence of bodily fluids, occlusions resulting from instrument movements, and specular reflections further affect the 3D reconstructions.

Discrete representation methods benefit from their sparsity properties, which improve efficiency in surface production. However, the same property also makes the representation method less robust in handling complex high-dimensional changes (non-topological deformations and color changes) - a general norm in surgical scenes – due to instrument-tissue interactions[249]. To address the deformations in tissue structures to an extent, sparse warp fields[250] have also been introduced in SuPer[251] and E-DSSR[236]. Novel techniques are also being explored for updating the preoperative CT models based on the soft tissue deformations and ablations observed through intraoperative endoscopic imaging[252]. Unlike discrete representation methods[248,249,253], emerging methods now employ continuous representations with the introduction of the Neural Radiance Field (NeRF) to reconstruct deformable tissues. Using the time-space input, the complex geometry and appearance are implicitly modeled to achieve high-quality 3D reconstruction[248,249]. EndoNeRF[248] employed two neural fields, where one is trained for tissue deformation and the other is trained for canonical density and appearance. It represents the deformable surgical scene as canonical neural radiance combined with a time-dependent neural displacement field. Advancing this further, EndoSurf[249] employs three neural fields to model the surgical dynamics, shape, and texture.

Pose estimation

Pose estimation aims to estimate the geometric relationship between an image and a prior model, which can take several forms[254]. These include rigid surface models, dense deformable surfaces, point-based skeletons, and robot kinematic models[254,255]. Many of the same techniques and variations employed in depth estimation and 3D reconstruction tasks also apply here, including feature-based matching with the perspective-n-point problem[255] and end-to-end learning-based approaches[256]. For point-based skeletons, such as the human body, pose estimation relied on handcrafted features[257] before the advent of deep neural networks[258]. In the context of surgical data science, pose estimation is highly relevant given the amount of a priori information going into any surgery, which can be leveraged to create 3D models. These include surgical tool models, robot models, and patient images. The 6DoF pose estimation of surgical tools, for example, in relation to patient anatomy, can enable algorithms that anticipate surgical errors and mitigate the risk of injuries[254]. By identifying tools’ proximity to critical structures, pose estimation technologies can ensure safer operations[259]. This is further advanced by precise 6DoF pose estimation of both instruments and tissue.

Deep neural networks have been shown to demonstrate promising outcomes for object pose estimation in RGB images[254,260-263]. Modern approaches often involve training models to regress 2D key points instead of directly estimating the object pose. These key points are then utilized to reconstruct the 6DoF object pose through the perspective-n-point (PnP) algorithm, with techniques showing robust performance, even in scenarios with occlusions[260].

Hand pose estimation also benefits from these technological advancements, with several methods proposed for deducing hand configurations from single-frame RGB images[264]. This capability is crucial for understanding the interactions between surgical tools and the operating environment, offering insights into the precise manipulation of instruments.

Beyond tool and hand pose estimation, human pose estimation can be applied for a broad spectrum of clinical applications, including surgical workflow analysis, radiation safety monitoring, and enhancing human-robot cooperation[265,266]. By leveraging videos from ceiling-mounted cameras, which capture both personnel and equipment in the operating room, human pose estimation can identify the finer activities within surgical phases, such as interactions between clinicians, staff, and medical equipment. The feasibility of estimating the poses of the individuals in an operating room, utilizing color images, depth images, or a combination of both, opens possibilities for real-time analysis of clinical environments[267].

APPLICATIONS OF GEOMETRIC SCENE UNDERSTANDING EMPOWERED DIGITAL TWINS

Geometric scene understanding plays a pivotal role in developing the DT framework by enabling the creation and real-time refinement of digital models based on real-world observations. Geometric information processing is crucial here for precise representation, visualization, and model interaction. Section “GEOMETRIC SCENE UNDERSTANDING TASKS” outlined the methods for processing this information, critical for navigating the complex geometry of surgical settings - identifying shapes, positions, and movements of anatomical features and tools. This section delves into the integration of geometric scene understanding within the DT framework, emphasizing its successful applications. It offers valuable insights that could be leveraged or specifically adapted to further the development of DT technologies in surgery.

Simulators serve as the essential infrastructure for creating, maintaining, and visualizing a DT of the physical world, crucially facilitating the collection and transmission of data back to the real world[268,269]. The Asynchronous Multi-Body Framework (AMBF) has demonstrated success in this regard through its applications in the surgical domain[270,271]. Building on this foundation, the fully immersive virtual reality for skull-base surgery (FIVRS) infrastructure, developed using AMBF, has been applied to DT frameworks and applications, demonstrating significant advancements in the field[7,272-275]. Twin-S, a DT framework designed for skull base surgery, leverages high-precision optical tracking and real-time simulation to model, track, and update the virtual counterparts of physical entities - such as the surgical drill, surgical phantom, tool-to-tissue interaction, and surgical camera - with high accuracy[7]. Additionally, this framework can be integrated with vision-based tracking algorithms[256], offering a potential alternative to optical trackers, thus enhancing its versatility and application scope. Contributing further to the domain, a collaborative robot framework has been developed to improve situational awareness in skull base surgery. This framework[274] introduces haptic assistive modes that utilize virtual fixtures based on generated signed distance fields (SDF) of critical anatomies from preoperative CT scans, thereby providing real-time haptic feedback. The effective communication between the real environment and the simulator is facilitated by adopting the Collaborative Robotics Toolkit (CRTK)[276] convention, which promotes modularity and seamless integration with other robotic systems. Additionally, an open-source toolset that integrates a physics-based constraint formulation framework, AMBF[270,271], with a state-of-the-art imaging platform application, 3D Slicer[277], has been developed[275]. This innovative toolset enables the creation of highly customizable interactive digital twins, incorporating the processing and visualization of medical imaging, robot kinematics, and scene dynamics for real-time robot control.

In addition to AMBF-empowered DT models, other DT models have also been proposed and explored for various surgical procedures. In liver surgery, a novel integration of thermal ablation with holographic augmented reality (AR) and DTs offers dynamic, real-time 3D navigation and motion prediction to improve accuracy and real-time performance[278]. Similarly, in the realm of cardiovascular interventions, the development of patient-specific artery models for coronary stenting simulations employs digital twins to personalize treatments. This approach uses finite element models derived from 3D reconstructions to validate in silico stenting procedures against actual clinical outcomes, underlining the move toward personalized care[279]. Orthopedic surgery benefits from applying DTs in evaluating the biomechanical effectiveness of stabilization methods for tibial plateau fractures generated from postoperative 3D X-ray images, aiding in optimizing surgical strategies and postoperative management[280]. The utilization of DT, AI, and machine learning to identify personalized motion axes for ankle surgery also marks a significant advancement, promising improvements in total ankle arthroplasty by ensuring the precise alignment of implants according to the specific anatomy of each patient[281]. Moreover, introducing a method to synchronize real and virtual manipulations in orthopedic surgeries through a dynamic DT enables surgeons to monitor and adjust the patient’s joint in real time with visual guidance. This technique not only ensures accurate alignments and adjustments during procedures but also significantly improves joint surgery outcomes.

The potential of DTs extends further when considering their role in enhancing higher-level downstream applications, ranging from surgical phase recognition and gesture classification to intraoperative guidance systems. Leveraging geometric understanding, DTs can interpret the broader context and flow of surgical operations, thereby increasing the precision and safety of interventions. Surgical phase recognition, for instance, utilizes insights from both direct video sources and interventional X-ray sequences to accurately identify the stages of a surgical procedure[11-18,282]. This facilitates a more structured and informed approach to surgery, enhancing the decision-making process and the efficacy of robotic assistants[14,19-21].

Furthermore, evaluating and enhancing surgical workflows and skills through these technologies can significantly advance surgeon training. By providing objective, quantifiable feedback on surgical techniques, DTs can support a comprehensive approach to assessing and improving surgical proficiency. This not only aids in training novices but also enhances performance evaluation across a spectrum of surgeons, from novices to experts, including those performing robot-assisted procedures[20,22,23,283-285]. The development of advanced cognitive surgical assistance technologies, based on the analysis of surgical workflows and skills, represents another opportunity. These technologies have the potential to enhance operational safety and foster semi-autonomous robotic systems that anticipate and adapt to the surgical team’s needs, thereby improving the collaborative efficiency of the surgical environment[1,3,286].

Intraoperative guidance technologies offer surgeons improved precision and real-time feedback[6]. Innovations such as mixed reality overlays and virtual fixtures could see their utility and efficacy greatly enhanced through integration with DTs. This synergy could further refine surgical accuracy and patient safety. Moreover, advancements like tool and needle guidance systems, alongside automated image acquisition[4,8], exemplify progress in geometric understanding and digital innovation. Integrating DTs with these surgical technologies holds the potential to improve standards for minimally invasive procedures and overall surgical quality.

Table 2 summarizes important methods for geometric scene understanding tasks and applications.

Summarization of methods

| Category | Methods |

| Segmentation and detection | EFFNet[117], Bamba et al.[118], Cerón et al.[119], Wang et al.[120], Zhang et al.[121], Yang et al.[122], CaRTS[2], TC-CaRTS[123], Colleoni et al.[124], daVinci GAN[125], AdptiveSAM[145], SurgicalSAM[146], Stenmark et al.[179], Cheng et al.[180], Zhao et al.[181,186], Robu et al.[182], Lee et al.[183], Seenivasan et al.[95], García-Peraza-Herrera et al.[184], Jo et al.[185], Alshirbaji et al.[187] |

| Depth estimation | Hannah[194], Marr and Poggio[192], Arnold[196], Okutomi and Kanade[197], Szeliski and Coughlan[198], Bouguet and Perona[188], Iddan and Yahav[189], Torralba and Oliva [191], Mueller-Richter et al.[201], Stoyanov et al.[195], Nayar et al.[190], Lo et al.[199], Sinha et al.[203], Liu et al.[206], Bogdanova et al.[202], Sinha et al.[200], Visentini-Scarzanella et al.[207], Mahmood et al.[211], Zhan et al.[209], Liu et al.[204], Guo et al.[212], Wong and Soatto[216], Chen et al.[213], Li et al.[205], Liu et al.[210], Schreiber et al.[214], Tong et al.[215], Widya et al.[217], Ozyoruk et al.[67], Allan et al.[68], Hwang et al.[219], Szeliski[193], Oda et al.[208], Shao et al.[218], Li et al.[220], Tukra and Giannarou[222], Ali and Pandey[71], Masuda et al.[221], Zhao et al.[223], Han et al.[224], Yang et al.[225], Zhang et al.[226] |

| 3D reconstruction | Dynamicfusion[250], Lin et al.[243], Song et al.[244], Zhou and Jagadeesan[245], Wdiya et al.[242], Li et al.[251], Wei et al.[247], EMDQ-SLAM[246], E-DSSR[236], EndoNeRF[248], EndoSurf[249], Nguyen et al.[253], Mangulabnan et al.[252] |

| Pose estimation | Hein et al.[254], Félix et al.[255], Tatoo[256], Allan et al.[259], Kadkhodamohammadi et al.[265], Padoy[266], Kadkhodamohammadi et al.[267] |

| Applications | FIVRS[272], Ishida et al.[273], Ishida et al.[274], Sahu et al.[275], Twin-S[7], Shi et al.[278], Poletti et al.[279], Aubert et al.[280], Hernigou et al.[281] |

DISCUSSION

The concept of DTs is rapidly gaining momentum in various surgical procedures, showcasing its transformative potential in shaping the future of surgery. The introduction of DT across various surgical procedures such as skull base surgery[7,272-275], liver surgery[278], cardiovascular intervention[279], and orthopedic surgery[280,281] demonstrate the broad effectiveness of DT technology in improving surgical precision and patient outcomes across different medical specialties. As stated in Section “APPLICATIONS OF GEOMETRIC SCENE UNDERSTANDING EMPOWERED DIGITAL TWINS”, the emergence of novel geometric scene understanding applications, such as phase recognition and gesture classification, could further empower DT models to interpret the broader context and flow of surgical operations. The potential ability to derive context-aware intelligence from a deep understanding of surgical dynamics further emphasizes DTs’ potential to advance robot-assisted surgeries and procedural planning. Geometric scene understanding-empowered DTs offer an alternate approach to the holistic understanding of the surgical scene through virtual models and have the potential to subsequently enhance the surgical process, from planning and execution to training and postoperative analysis, driving the digital revolution in surgery.

Geometric scene understanding forms the backbone of DTs that incorporate and process diverse enriched data from different stages of surgery. The evolution of geometric information processing has transitioned from simple low-level feature processing to neural network-based methods, introducing innovative geometric representations like neural fields. Various geometric scene understanding tasks have also been established, with corresponding methods achieving significant performance improvements. However, challenges persist in the representation of geometric information, the development of geometric scene understanding within the surgical domain, and its application to the DT paradigm.

In geometric representations, there is no single form that can meet all the requirements of DT in terms of accuracy, applicability, efficiency, interactivity, and reliability. Grid-based methods compromise the accuracy and processing efficiency for convenient structure and representation ability. It also lacks interactivity and reliability, as the geometric information is mainly represented by the aggregation of adjacent pixels. Point-based representations provide accurate positions for each point. It also allows the establishment of explicit connections between points to form boundaries, improving rendering efficiency and providing more geometric constraints for interaction and interpretation. Thus, polygon mesh representation has been widely used in 3D surface reconstruction and digital modeling. However, it is computationally less efficient, and the sparsity of the points still limits the accuracy. Latent space representation, while offering the possibility of dimensional reduction (ruled encoding) for efficiency and high-level semantic incorporation (neural encoding), lacks accuracy due to information loss and reliability due to limited interpretability and generalizability. Functional representations offer a new approach to representing geometric information through mathematical constraints or mappings. Ruled functions have the advantages of processing efficiency, easy interactivity, and quantized interpretability. However, it lacks the ability to represent complex surfaces. On the other hand, the neural fields[48,49], leveraging on neural networks’ universal approximation ability, demonstrate remarkable representation capabilities. This enables the efficient continuous 3D representation of complex and dynamic surgical scenes, which makes it a popular topic. However, the use of black-box networks sacrifices interactivity and interpretability. While no one geometric representation is optimal for all cases, the current advances require the user to choose data presentation based on the trade-off and the task-specific priority. For tasks that require robust performance in all aspects, future work could explore novel data representation that fuses sparse representations and neural fields, to achieve better surface representation with lesser computation load.

Geometric scene understanding has made immense strides in the general computer vision domain. However, its progress in the surgical domain is hindered by multiple factors. Firstly, limited data availability and annotations have become a major roadblock in adapting advanced but data-consuming architectures like ViT[233] from the computer vision domain. This significantly impacts the accuracy and reliability of segmentation and detection, which are prerequisites for the success of DT. Although self-supervised techniques of using stereo matching exist that might exempt depth estimation from lack of annotations, the stability[287] of training needs careful attention. While efforts have been made to bridge the gap in the scale of data between computer vision and the surgical domain through synthetic data generation and sim-to-real generalization techniques[288,289], this direction also poses challenges due to the lack of interpretability for neural networks. Secondly, the complexity of the surgical scene, including non-static organs and deformable tissues, poses another major challenge when updating the DT models relies solely on pose estimation, with the assumption that 3D models are rigid. Although dynamic 3D reconstruction methods exist[248,249], they cannot currently be processed in real time for updating geometric information and require auxiliary constraints like stereo matching for plausible output. The lack of observability due to limited operational space is also a major challenge that leads to a paucity of features for geometric scene understanding. Incorporating other modalities like robot kinematics[2] and temporal constraints[123] can be a complement under this situation. The emergence of foundation models also offers an alternate approach to harness the power of foundation models trained on enormous natural images to address the aforementioned challenges in the surgical domain[146,145]. However, the domain gap may hinder the optimal extraction of precise features[147] and may require further work to extend them in the surgical domain.

CONCLUSION

Surgical data science, benefiting from the advent of end-to-end deep learning architectures, is also hindered by their lack of reliability and interoperability. The DT paradigm is envisioned to advance the surgical data science domain further, introducing new avenues of research in surgical planning, execution, training, and postoperative analysis by providing a universal digital representation that enables robust and interpretable surgical data science research. Geometric scene understanding is the core building block of DT and plays a pivotal role in building and updating digital models. In this review, we find that the existing geometric representation and well-established tasks provide fundamental materials and tools to implement the DT framework and have led to the emergence of successful applications. However, challenges remain in employing more advanced but data-consuming methods especially in segmentation, detection, and monocular depth estimation tasks in the surgical domain due to a lack of annotations and a gap in the scale of the data. The complexity of the surgical scene due to the large portion of dynamic and deformable tissues, and the lack of observability due to limited operational space are also common factors that hinder the development of geometric scene understanding tasks, especially for the 3D reconstruction that demands multi-view observations. To address these challenges, numerous approaches, including synthetic image generation, sim-to-real generalization, auxiliary data incorporation, and foundational model adaptation, are being explored. Among all of these methods, the auxiliary data incorporation and foundation models present the most promising improvement. Since the auxiliary data is not always available and the exploration of the foundation models in surgical data science is still preliminary, it is expected to see more advancement in this direction that improves the geometric scene understanding performance and further promotes DT research. Developing an accurate, efficient, interactive, and reliable DT requires robust and efficient holistic geometric representation and combinations of effective geometric scene understanding, to build and update digital model pipelines in real time.

DECLARATIONS

Authors’ contributions

Initial writing of the majority part and coordination of the collaboration among authors: Ding H

Initial writing of the 3D reconstruction, integration, and revision of the paper: Seenivasan L

Initial writing of the depth estimation and pose estimation part and revision of the paper: Killeen BD

Initial writing of the pose estimation and application part: Cho SM

The main idea of the paper, overall structure, and revision of the paper: Unberath M

Availability of data and materials

See Section “Availability of data and materials” in the main text.

Financial support and sponsorship

This research is in part supported by (1) the collaborative research agreement with the Multi-Scale Medical Robotics Center at The Chinese University of Hong Kong; (2) the Link Foundation Fellowship for Modeling, Training, and Simulation; and (3) NIH R01EB030511 and Johns Hopkins University Internal Funds. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflict of interest

All authors declared that there are no conflicts of interest.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2024.

REFERENCES

1. Maier-Hein L, Eisenmann M, Sarikaya D, et al. Surgical data science - from concepts toward clinical translation. Med Image Anal. 2022;76:102306.

2. Ding H, Zhang J, Kazanzides P, Wu JY, Unberath M. CaRTS: causality-driven robot tool segmentation from vision and kinematics data. In: Wang L, Dou Q, Fletcher PT, Speidel S, Li S, editors. Medical Image Computing and Computer Assisted Intervention - MICCAI 2022. Cham: Springer; 2022. pp. 387-98.

3. Kenngott HG, Wagner M, Preukschas AA, Müller-Stich BP. [Intelligent operating room suite: from passive medical devices to the self-thinking cognitive surgical assistant]. Chirurg. 2016;87:1033-8.

4. Killeen BD, Gao C, Oguine KJ, et al. An autonomous X-ray image acquisition and interpretation system for assisting percutaneous pelvic fracture fixation. Int J Comput Assist Radiol Surg. 2023;18:1201-8.

5. Gao C, Killeen BD, Hu Y, et al. Synthetic data accelerates the development of generalizable learning-based algorithms for X-ray image analysis. Nat Mach Intell. 2023;5:294-308.

6. Madani A, Namazi B, Altieri MS, et al. Artificial intelligence for intraoperative guidance: using semantic segmentation to identify surgical anatomy during laparoscopic cholecystectomy. Ann Surg. 2022;276:363-9.

7. Shu H, Liang R, Li Z, et al. Twin-S: a digital twin for skull base surgery. Int J Comput Assist Radiol Surg. 2023;18:1077-84.

8. Killeen BD, Winter J, Gu W, et al. Mixed reality interfaces for achieving desired views with robotic X-ray systems. Comput Methods Biomech Biomed Eng Imaging Vis. 2023;11:1130-5.

9. Killeen BD, Chaudhary S, Osgood G, Unberath M. Take a shot! Natural language control of intelligent robotic X-ray systems in surgery. Int J Comput Assist Radiol Surg. 2024;19:1165-73.

10. Kausch L, Thomas S, Kunze H, et al. C-arm positioning for standard projections during spinal implant placement. Med Image Anal. 2022;81:102557.

11. Killeen BD, Zhang H, Mangulabnan J, et al. Pelphix: surgical phase recognition from X-ray images in percutaneous pelvic fixation. In: Greenspan H, Madabhushi A, Mousavi P, Salcudean S, Duncan J, Syeda-mahmood T, Taylor R, editors. Medical Image Computing and Computer Assisted Intervention - MICCAI 2023. Cham: Springer; 2023. pp. 133-43.

12. Garrow CR, Kowalewski KF, Li L, et al. Machine learning for surgical phase recognition: a systematic review. Ann Surg. 2021;273:684-93.

13. Weede O, Dittrich F, Worn H, et al. Workflow analysis and surgical phase recognition in minimally invasive surgery. In: 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO); 2012 Dec 11-14; Guangzhou, China. IEEE; 2012. pp. 1080-74.

14. Kiyasseh D, Ma R, Haque TF, et al. A vision transformer for decoding surgeon activity from surgical videos. Nat Biomed Eng. 2023;7:780-96.

15. Ban Y, Eckhoff JA, Ward TM, et al. Concept graph neural networks for surgical video understanding. IEEE Trans Med Imaging. 2024;43:264-74.

16. Czempiel T, Paschali M, Keicher M, et al. TeCNO: surgical phase recognition with multi-stage temporal convolutional networks. In: Martel AL, Abolmaesumi P, Stoyanov D, Mateus D, Zuluaga MA, Zhou SK, Racoceanu D, Joskowicz L, editors. Medical Image Computing and Computer Assisted Intervention - MICCAI 2020. Cham: Springer; 2020. pp. 343-52.

17. Guédon ACP, Meij SEP, Osman KNMMH, et al. Deep learning for surgical phase recognition using endoscopic videos. Surg Endosc. 2021;35:6150-7.

18. Murali A, Alapatt D, Mascagni P, et al. Encoding surgical videos as latent spatiotemporal graphs for object and anatomy-driven reasoning. In: Greenspan H, et al., editors. Medical Image Computing and Computer Assisted Intervention - MICCAI 2023. Cham: Springer; 2023. pp. 647-57.

19. Zhang D, Wang R, Lo B. Surgical gesture recognition based on bidirectional multi-layer independently RNN with explainable spatial feature extraction. In: 2021 IEEE International Conference on Robotics and Automation (ICRA); 2021 May 30 - Jun 5; Xi’an, China. IEEE; 2021. pp. 1350-6.

20. DiPietro R, Ahmidi N, Malpani A, et al. Segmenting and classifying activities in robot-assisted surgery with recurrent neural networks. Int J Comput Assist Radiol Surg. 2019;14:2005-20.

21. Dipietro R, Hager GD. Automated surgical activity recognition with one labeled sequence. In: Shen D, et al., editors. Medical Image Computing and Computer Assisted Intervention - MICCAI 2019. Cham: Springer; 2019. pp. 458-66.

22. Reiley CE, Lin HC, Yuh DD, Hager GD. Review of methods for objective surgical skill evaluation. Surg Endosc. 2011;25:356-66.

23. Lam K, Chen J, Wang Z, et al. Machine learning for technical skill assessment in surgery: a systematic review. NPJ Digit Med. 2022;5:24.

24. Alapatt D, Murali A, Srivastav V, Mascagni P, Consortium A, Padoy N. Jumpstarting surgical computer vision. arXiv. [Preprint.] Dec 10, 2023 [accessed 2024 Jul 2]. Available from: https://arxiv.org/abs/2312.05968.

25. Ramesh S, Srivastav V, Alapatt D, et al. Dissecting self-supervised learning methods for surgical computer vision. Med Image Anal. 2023;88:102844.

26. Geirhos R, Rubisch P, Michaelis C, Bethge M, Wichmann FA, Brendel W. Imagenet-trained cnns are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv. [Preprint.] Nov 29, 2018 [accessed 2024 Jul 2]. Available from: https://arxiv.org/abs/1811.12231.

27. Glocker B, Jones C, Roschewitz M, Winzeck S. Risk of bias in chest radiography deep learning foundation models. Radiol Artif Intell. 2023;5:e230060.

28. Geirhos R, Jacobsen J, Michaelis C, et al. Shortcut learning in deep neural networks. Nat Mach Intell. 2020;2:665-73.

29. Wen C, Qian J, Lin J, Teng J, Jayaraman D, Gao Y. Fighting fire with fire: avoiding dnn shortcuts through priming. Available from: https://proceedings.mlr.press/v162/wen22d.html. [Last accessed on 2 Jul 2024]

30. Olah C, Satyanarayan A, Johnson I, et al. The building blocks of interpretability. Distill. 2018;3:e10.

32. Bjelland Ø, Rasheed B, Schaathun HG, et al. Toward a digital twin for arthroscopic knee surgery: a systematic review. IEEE Access. 2022;10:45029-52.

33. Erol T, Mendi AF, Doğan D. The digital twin revolution in healthcare. In: 2020 4th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT); 2020 Oct 22-24; Istanbul, Turkey. IEEE; 2020. pp. 1-7.

34. Representations of geometry for computer graphics. Available from: https://graphics.stanford.edu/courses/cs233-24-winter-v1/ReferencedPapers/60082881-Presentations-of-Geometry-for-Computer-Graphics.pdf. [Last accessed on 2 Jul 2024].

35. Levoy M, Whitted T. The use of points as a display primitive. 2000. Available from: https://api.semanticscholar.org/CorpusID:12672240. [Last accessed on 2 Jul 2024].

36. Botsch M, Kobbelt L, Pauly M, Alliez P, Levy B. Polygon mesh processing. A K Peters/CRC Press; 2010. Available from: http://www.crcpress.com/product/isbn/9781568814261. [Last accessed on 2 Jul 2024]

37. Jolliffe IT, Cadima J. Principal component analysis: a review and recent developments. Philos Trans A Math Phys Eng Sci. 2016;374:20150202.

38. Blanz V, Vetter T. A morphable model for the synthesis of 3D faces. In: Whitton MC, editor. Seminal graphics papers: pushing the boundaries. New York: ACM; 2023. pp. 157-64.

39. Edwards GJ, Taylor CJ, Cootes TF. Interpreting face images using active appearance models. In: Proceedings Third IEEE International Conference on Automatic Face and Gesture Recognition; 1998 Apr 14-16; Nara, Japan. IEEE; 1998. pp. 300-5.

40. Karamizadeh S, Abdullah SM, Manaf AA, Zamani M, Hooman A. An overview of principal component analysis. J Signal Inf Process. 2013;4:173-5.

41. Liu X, Killeen BD, Sinha A, et al. Neighborhood normalization for robust geometric feature learning. In: 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2021 Jun 20-25; Nashville, TN, USA. IEEE; 2021. pp. 13049-58.

42. Drenkow N, Sani N, Shpitser I, Unberath M. A systematic review of robustness in deep learning for computer vision: mind the gap? arXiv. [Preprint.] Dec 1, 2021 [accessed 2024 Jul 2]. Available from: https://arxiv.org/abs/2112.00639.

45. Hornik K, Stinchcombe M, White H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989;2:359-66.